Explore the Application

Model management

The Model Management page helps end-users create ML models, track associated backend jobs, and monitor model performance once models have been trained.

There are three sub-pages on the Model Management page: Model Setup, Jobs, and Model Ops.

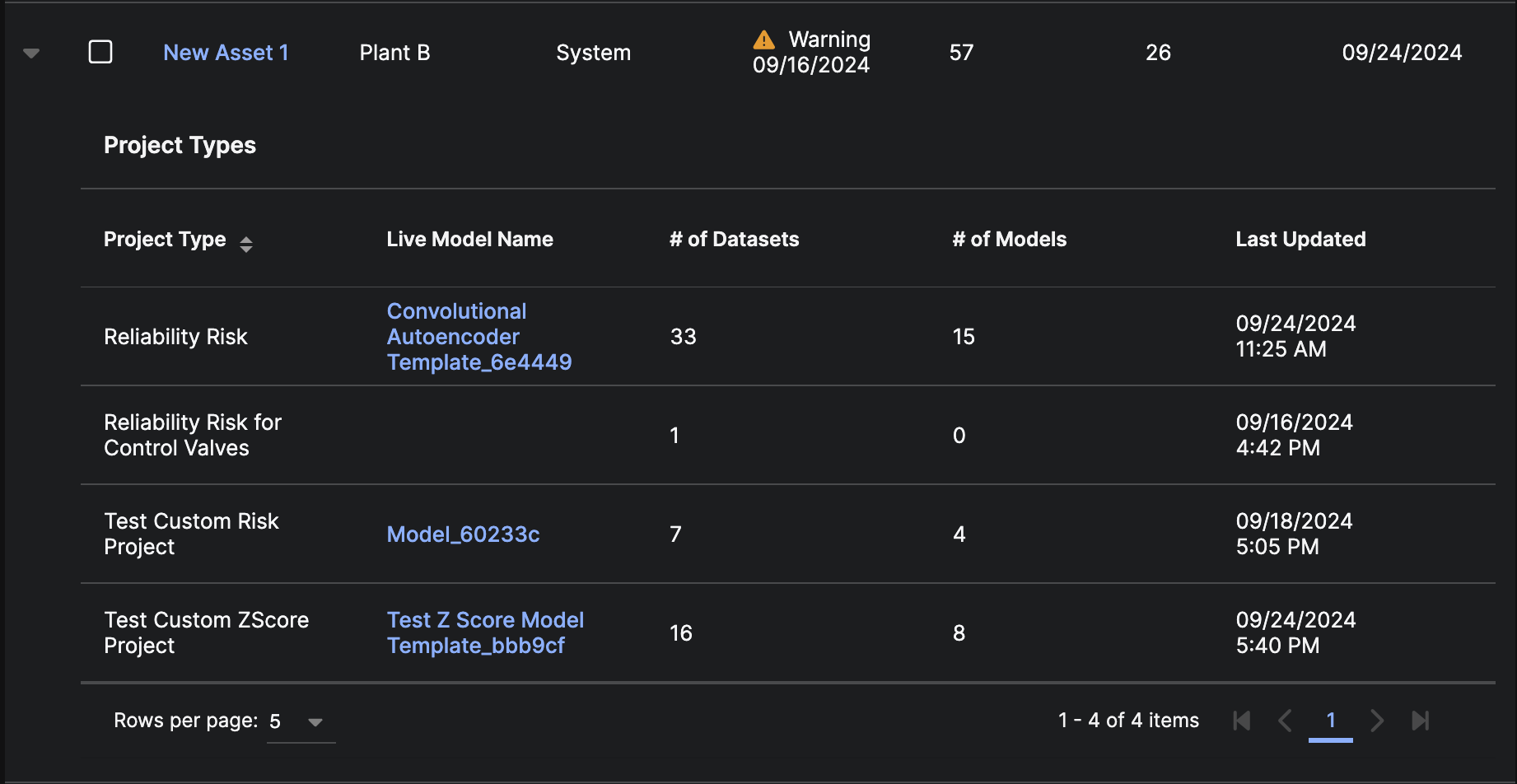

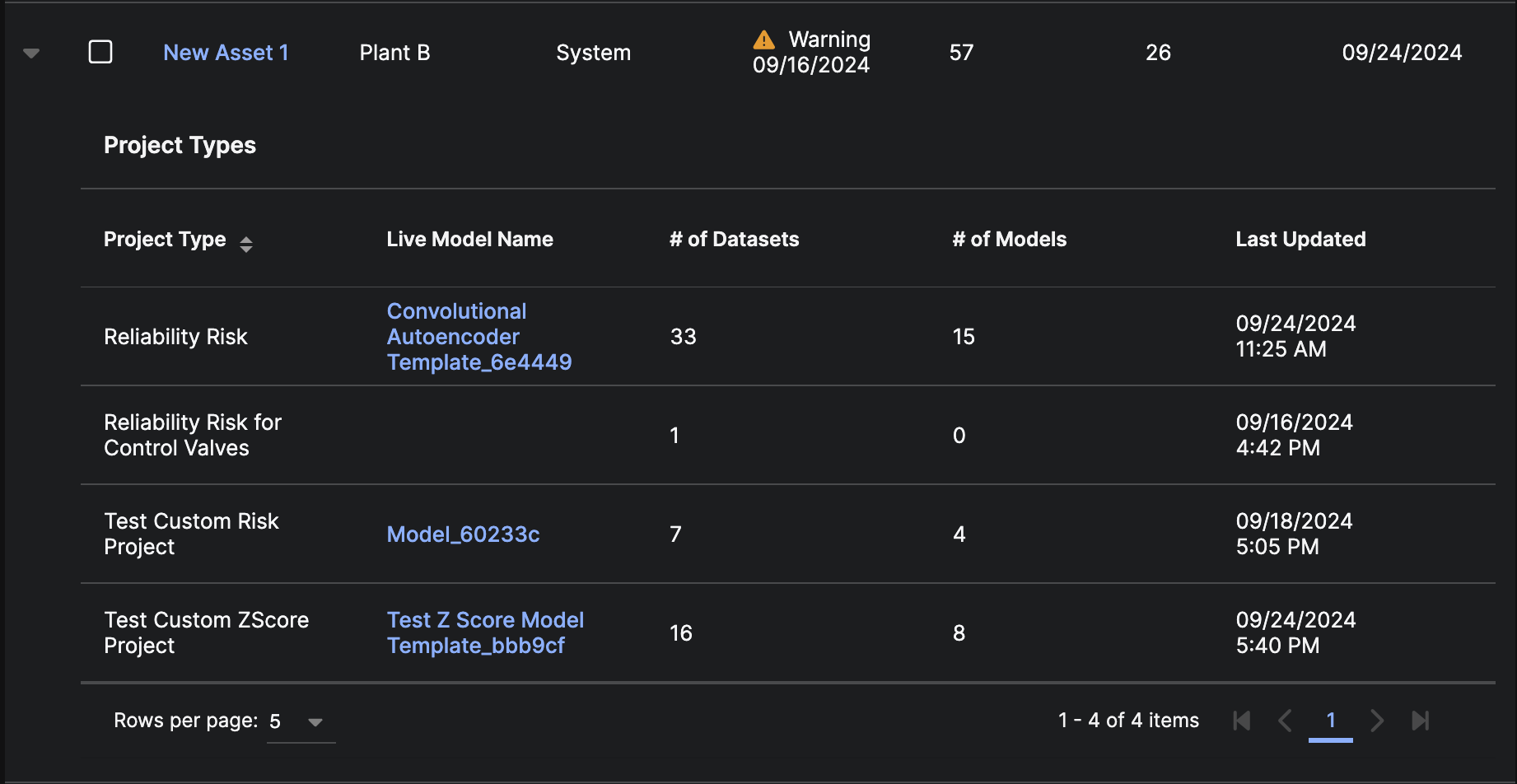

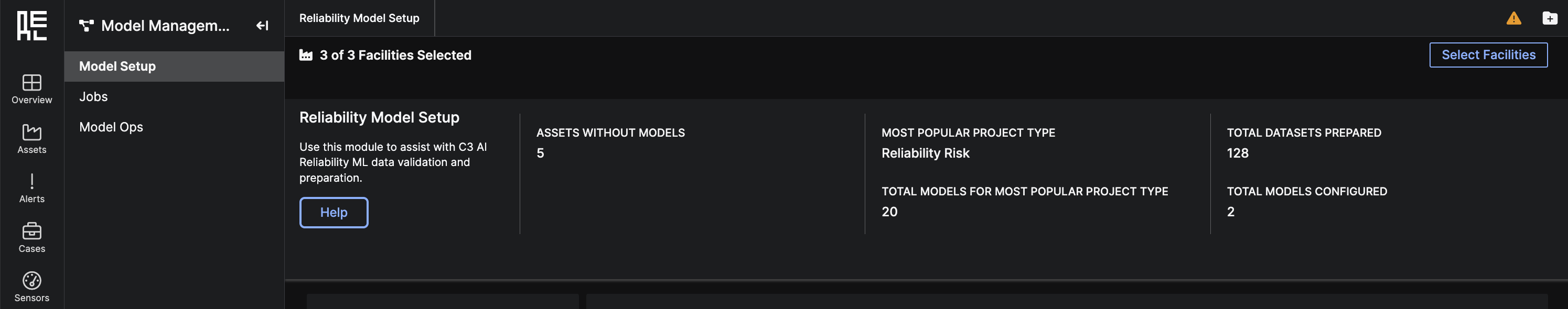

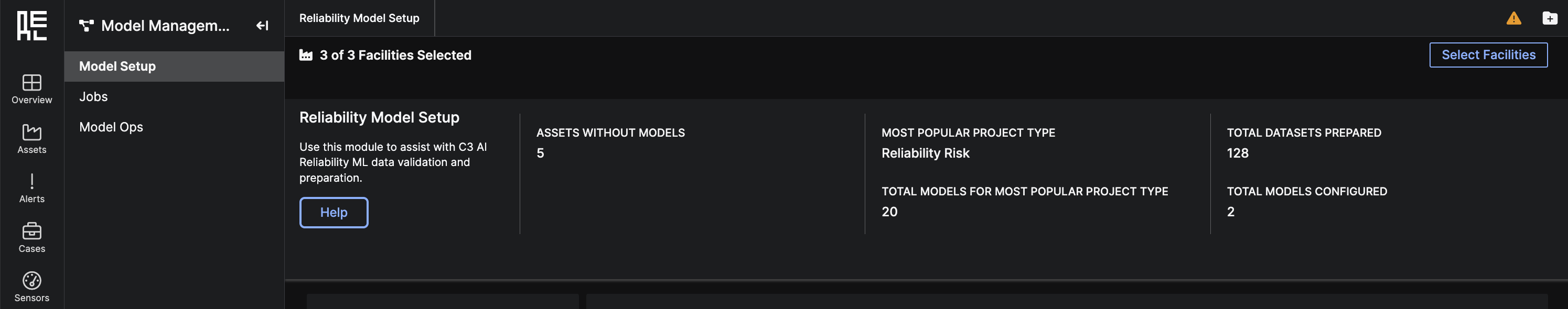

Model Setup Sub-Page

The Model Setup Sub-Page is a module to help users create and train ML models for C3 AI Reliability. This module provides entry points to three workflows that guide the user through the model creation process: Data Validation, Dataset Preparation, and Model Configuration. Note that Data Validation can be performed at the asset level, but Dataset Preparation and Model Configuration must be performed for each type of ML model that you choose to set up.

At the top of the Model Setup page, there are roll-up summaries of the following metrics across all assets:

- Assets Without Models

- Most Popular Project Type

- Total Models For Most Popular Project Type

- Total Datasets Prepared

- Total Models Configured

Click the Help button to view a side panel explaining more about the key steps to set up a model.

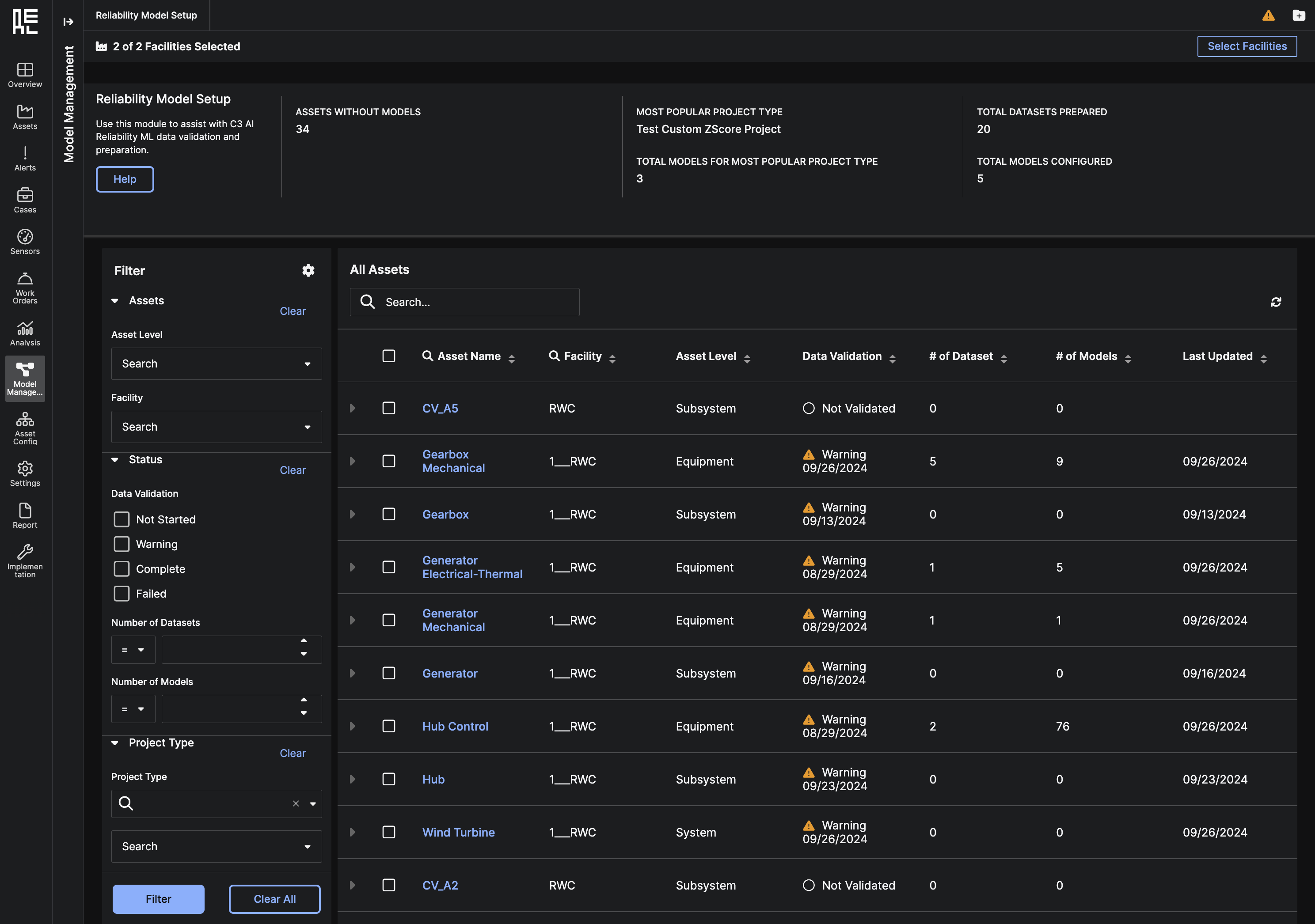

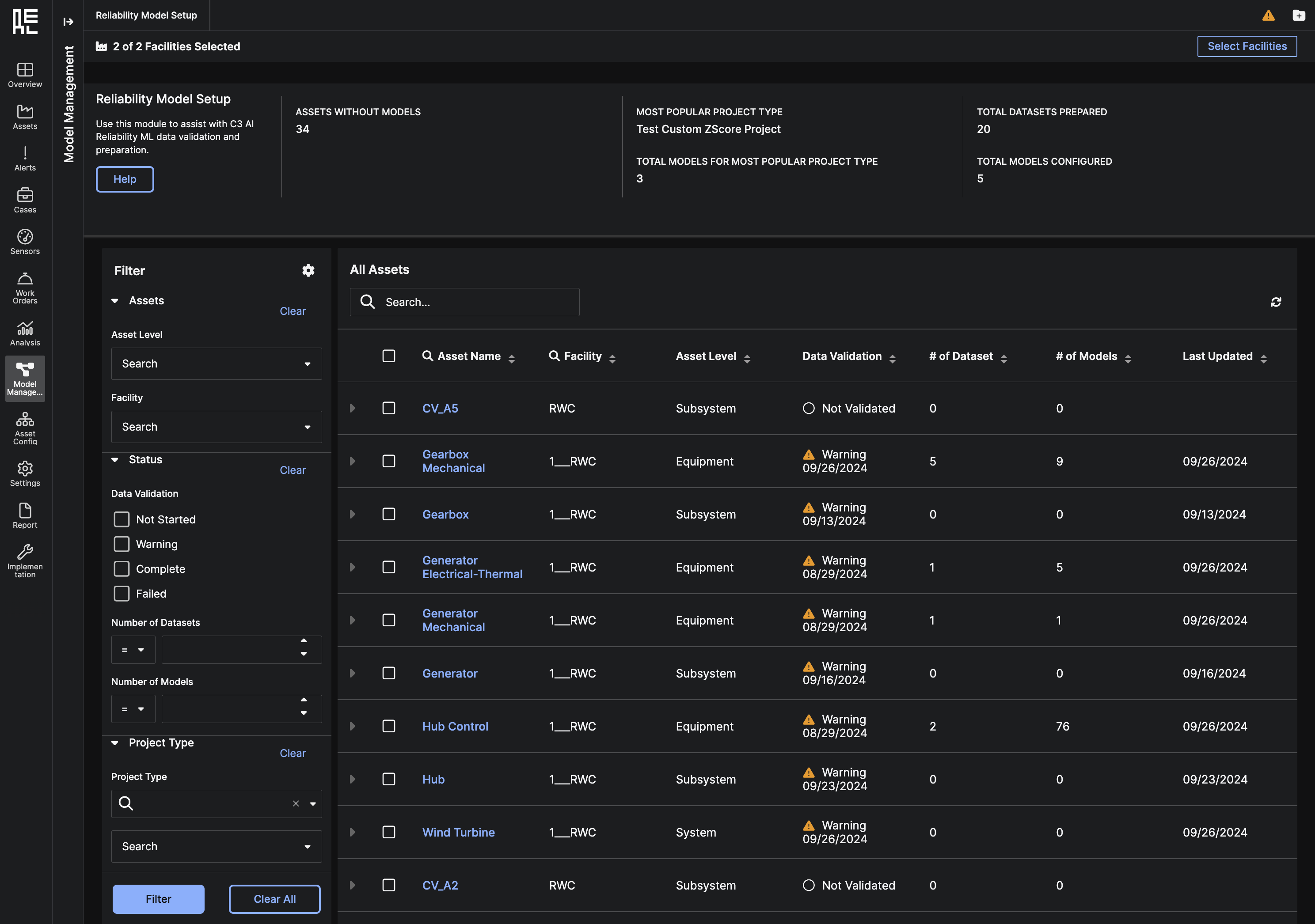

Below, you can see a grid table of all Assets that are loaded in the application. From the table, you can:

- Search for a specific Asset using keywords.

- Select multiple Assets using the checkboxes and run Data Validation, Dataset Preparation, or Model Configuration in bulk using the table actions.

- Click on the Asset Name to redirect to the Model Setup Asset Details Page.

You can also click on the arrow next to an asset’s name to expand and view a nested grid of all the machine learning Project Types currently set up on the asset. Here, you can view information about each Project Type, including the Project Type’s live model, total datasets, total models, and when the Project Type was last updated.

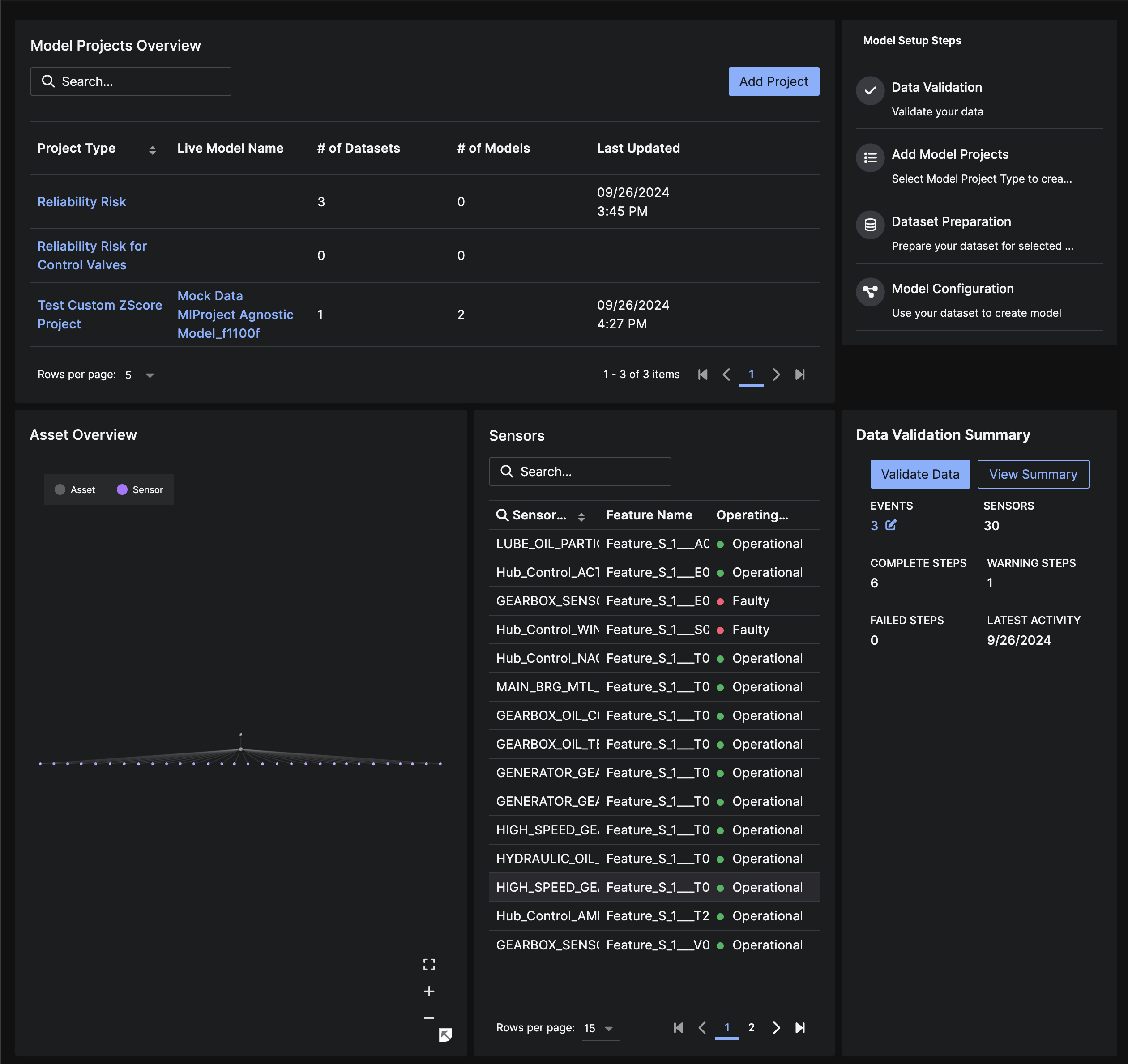

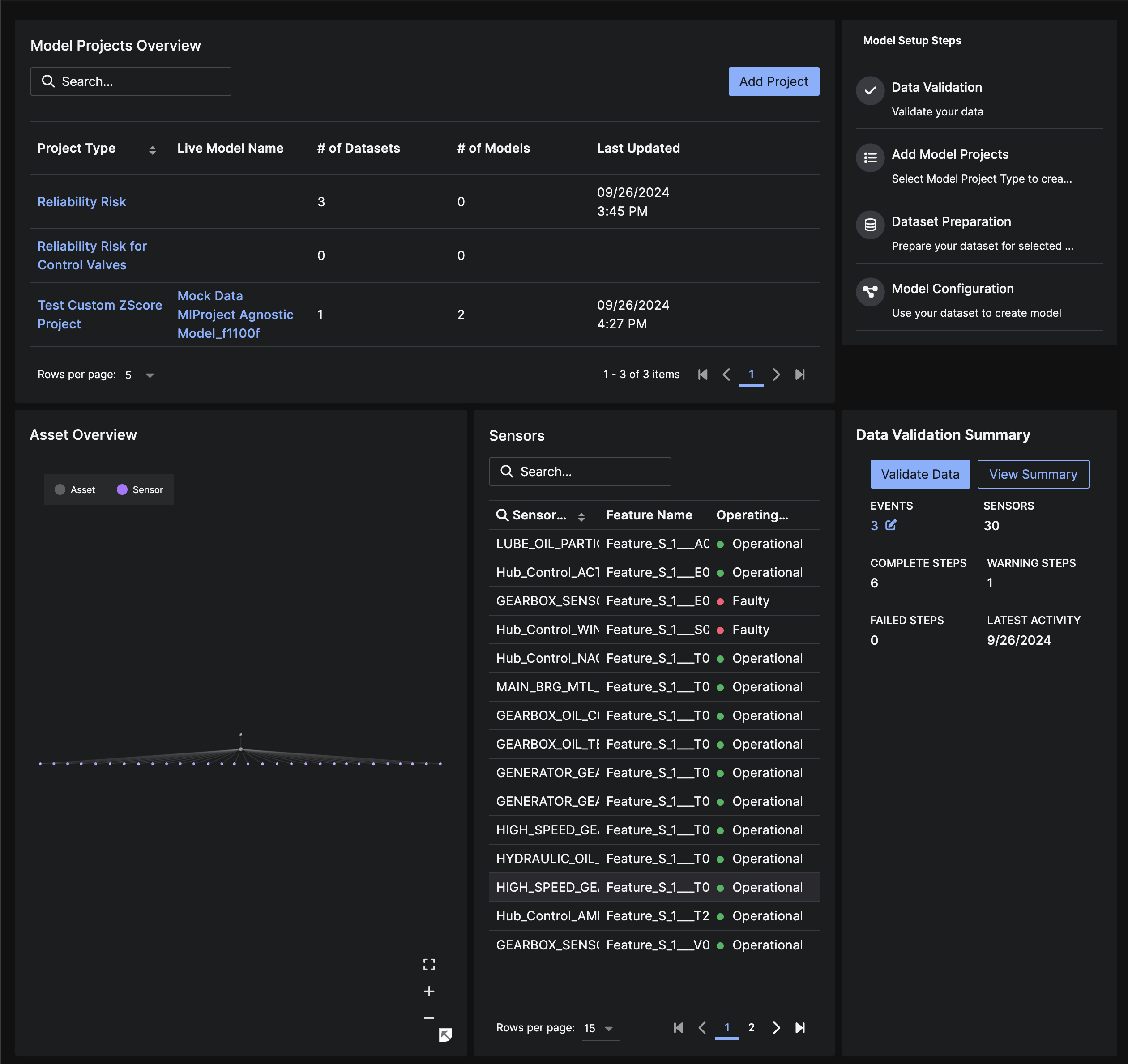

Model Setup Asset Detail

The Model Setup Asset Detail page is a home page to view all model setup activity for a specific asset. This page consists of:

- Model Projects Overview grid to provide high-level information about each Project Type and its modeling status. This grid provides entry points to viewing the Project Detail page or beginning Dataset Preparation or Model Configuration using the Project Type.

- Model Setup Steps grid as a reference for the key steps that must be taken to set up a model. Clicking on this grid will open a side-panel with additional information about each step.

- Asset Overview graph showing the asset and any connected sensors.

- Sensors grid showing all the sensors connected to the asset, corresponding feature names, and operational statuses.

- Data Validation Summary card showing the summary of the latest Data Validation run. This card contains entry points to running Data Validation, reviewing the Data Validation Summary, and editing Events that may exist in this asset’s history.

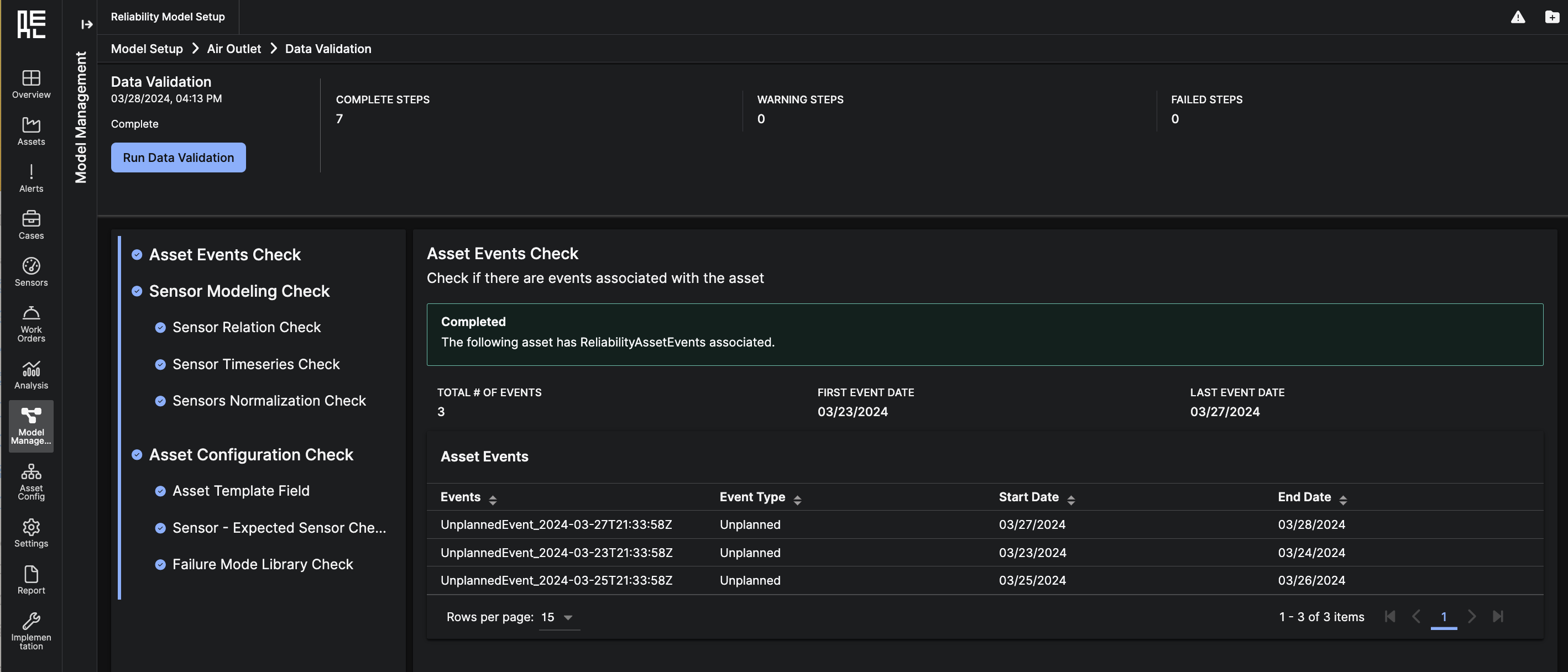

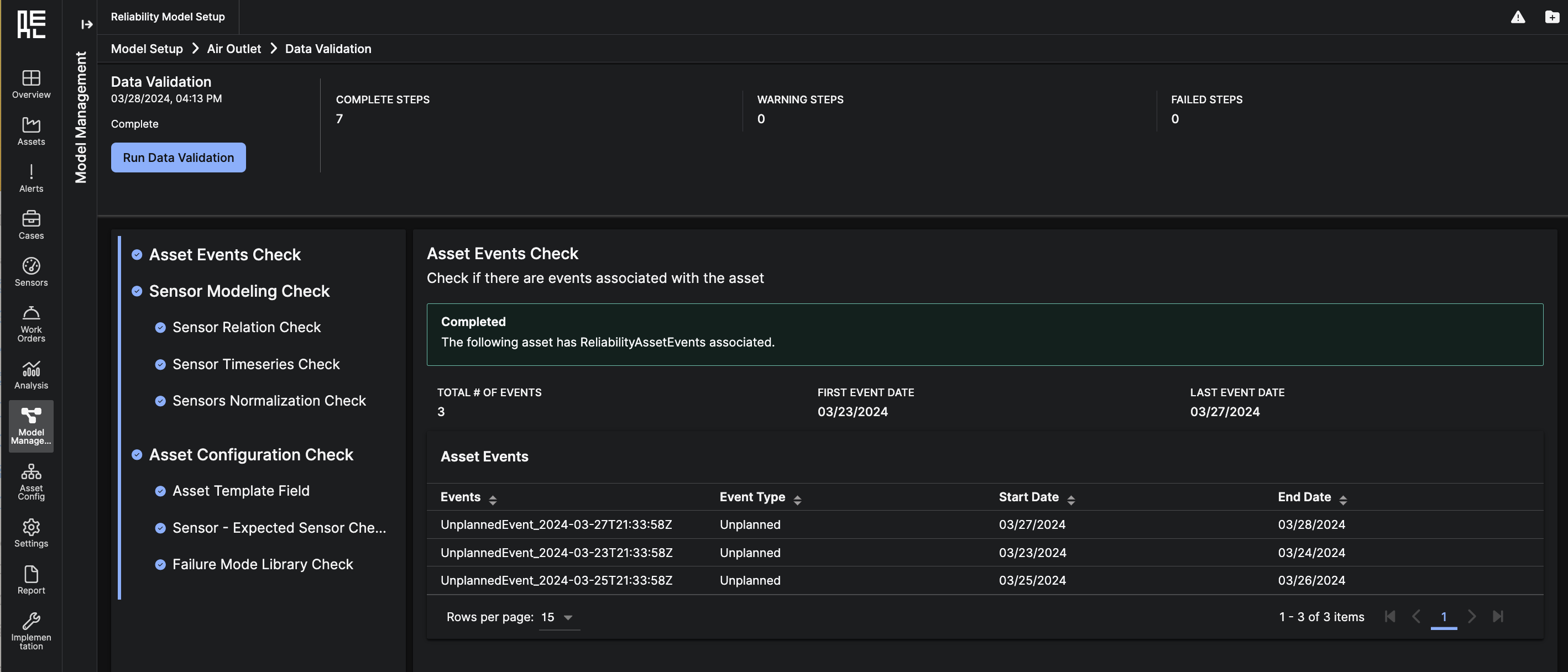

Data Validation

The Data Validation summary page provides an overview of the various data and data model checks that were performed during a Data Validation run. You can click through the different sections on the left side of the summary to get more information about the individual checks. You can also investigate any checks with a “failed” or “warning” status. The sub-pages provide additional information about the cause of that status and advise users about relevant next steps.

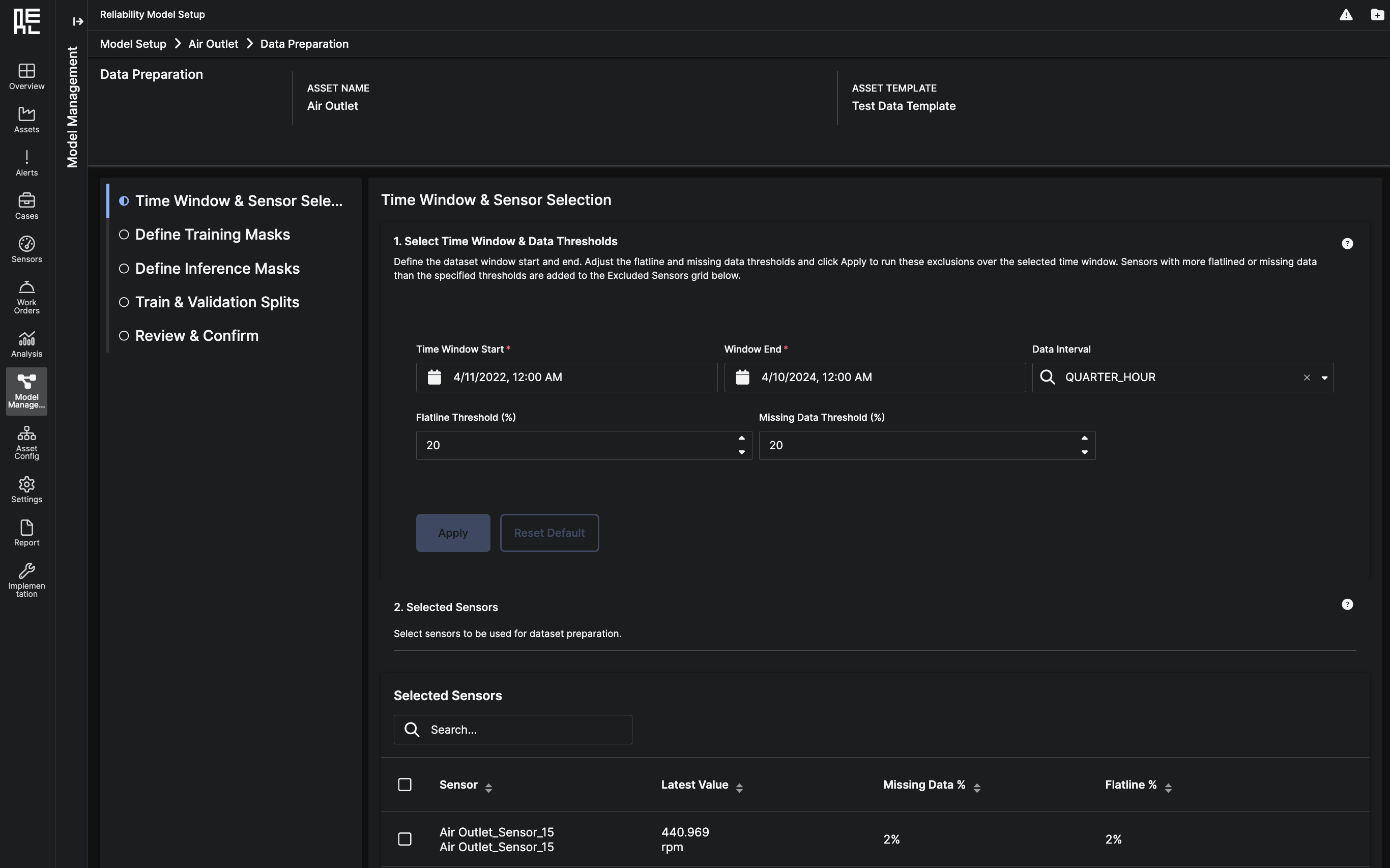

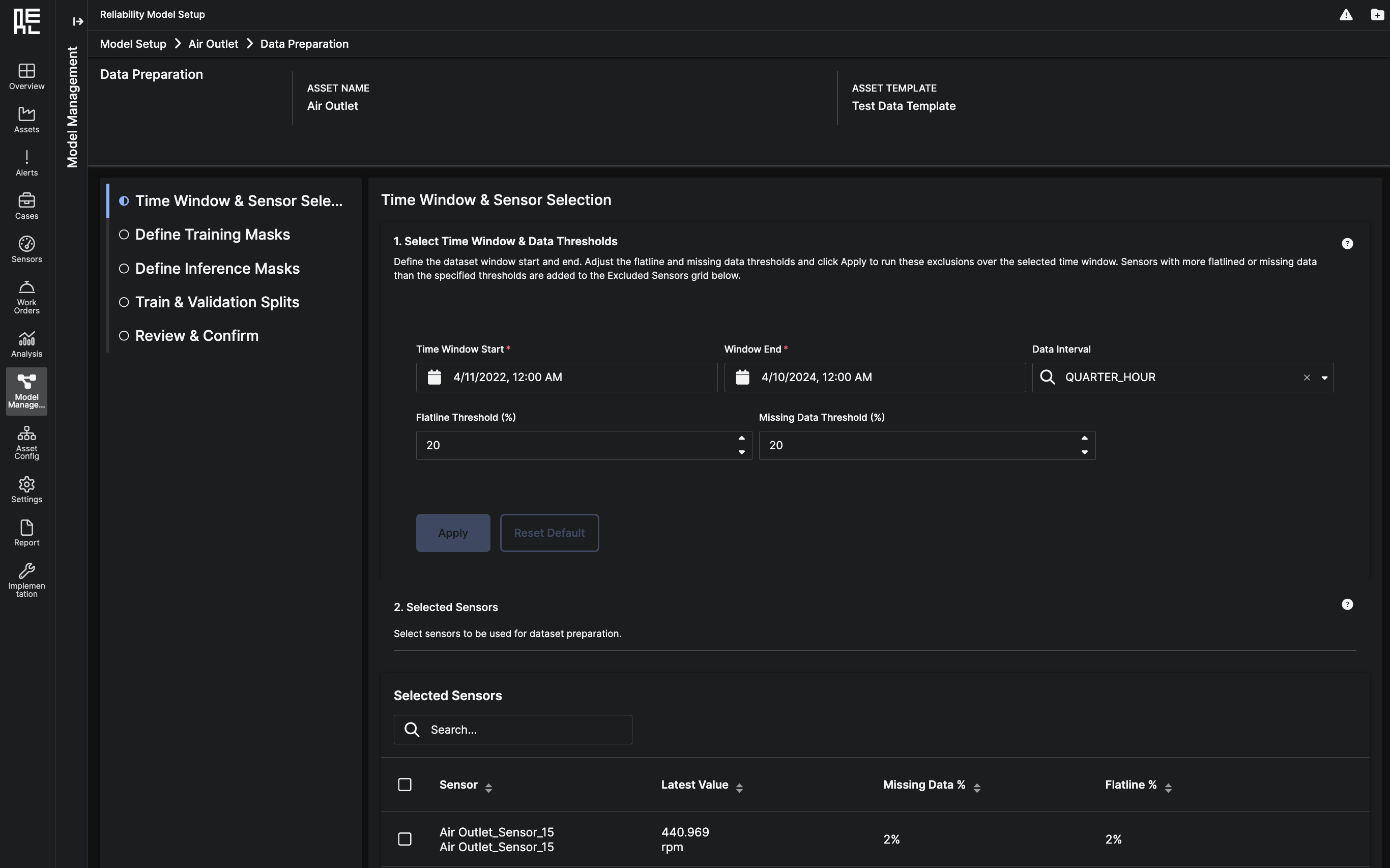

Dataset Preparation

The Dataset Preparation workflow enables users to create new datasets for a specific asset. Using the 5-step workflow, you can select all of the crucial parameters to define a dataset: the time window, the relevant sensors to include, additional sensors from other assets, the training masks, the inference masks, and the train and validation data splits. In the train and validation split, users have the option to choose how to split the data: one method is based on percentage, while the other is based on date selections. Once you complete the workflow, a dataset preparation job will be launched, and you will be able to view the completed dataset on the Model Setup – Asset Details screen.
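To make the two split options concrete, here is a minimal Python sketch of a percentage-based split and a date-based split over time-ordered sensor readings. It illustrates the general idea only and is not the application's implementation: the function names `split_by_percentage` and `split_by_date`, the `(timestamp, value)` row layout, and the sample data are all assumptions made for this example.

```python
from datetime import datetime


def split_by_percentage(rows, train_fraction):
    """Put the earliest `train_fraction` of the rows in training, the rest in validation."""
    if not 0 < train_fraction < 1:
        raise ValueError("train_fraction must be between 0 and 1")
    ordered = sorted(rows, key=lambda row: row[0])  # oldest reading first
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


def split_by_date(rows, validation_start):
    """Put rows before `validation_start` in training, rows on or after it in validation."""
    ordered = sorted(rows, key=lambda row: row[0])
    train = [row for row in ordered if row[0] < validation_start]
    validation = [row for row in ordered if row[0] >= validation_start]
    return train, validation


# Hypothetical data: ten daily readings as (timestamp, value) pairs.
rows = [(datetime(2024, 1, day), float(day)) for day in range(1, 11)]

train_pct, val_pct = split_by_percentage(rows, 0.8)
train_date, val_date = split_by_date(rows, datetime(2024, 1, 8))

print(len(train_pct), len(val_pct))    # 8 2
print(len(train_date), len(val_date))  # 7 3
```

Both helpers keep the training rows earlier in time than the validation rows, which is a common choice for time-series data. Whether the application does the same is not stated here.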

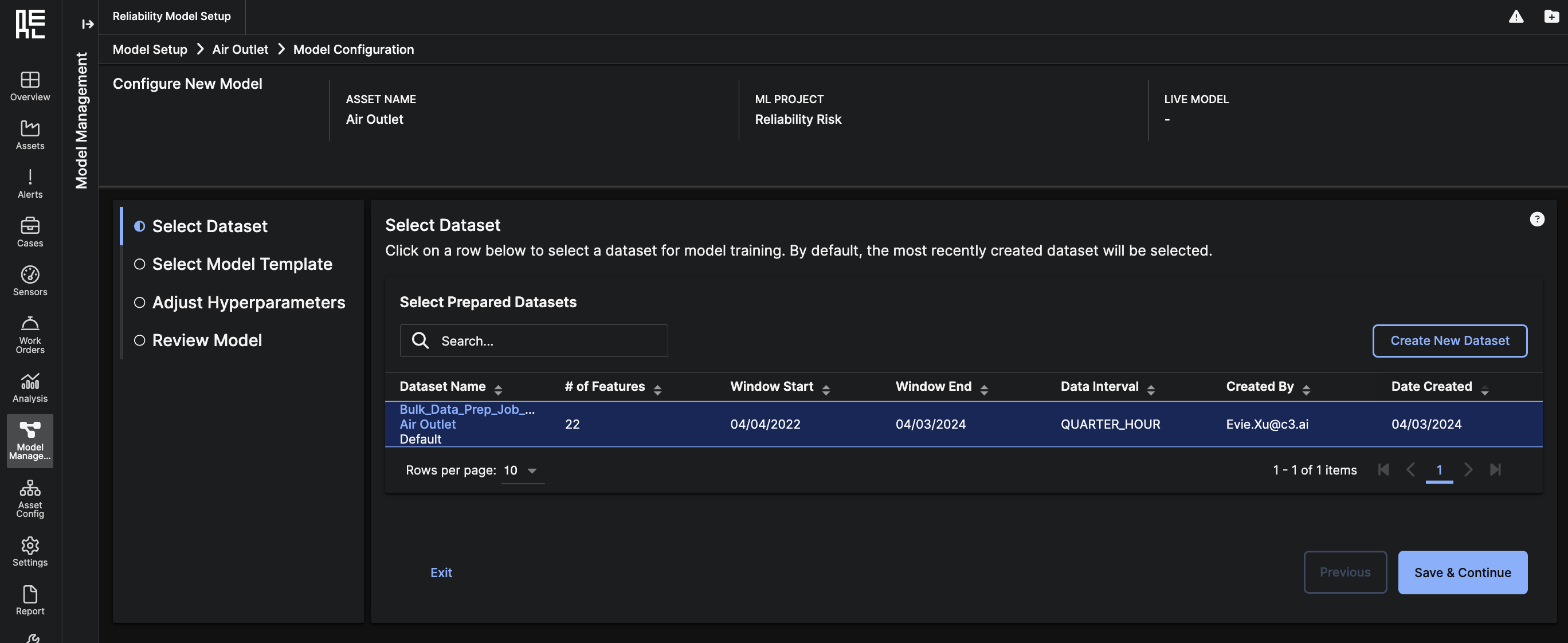

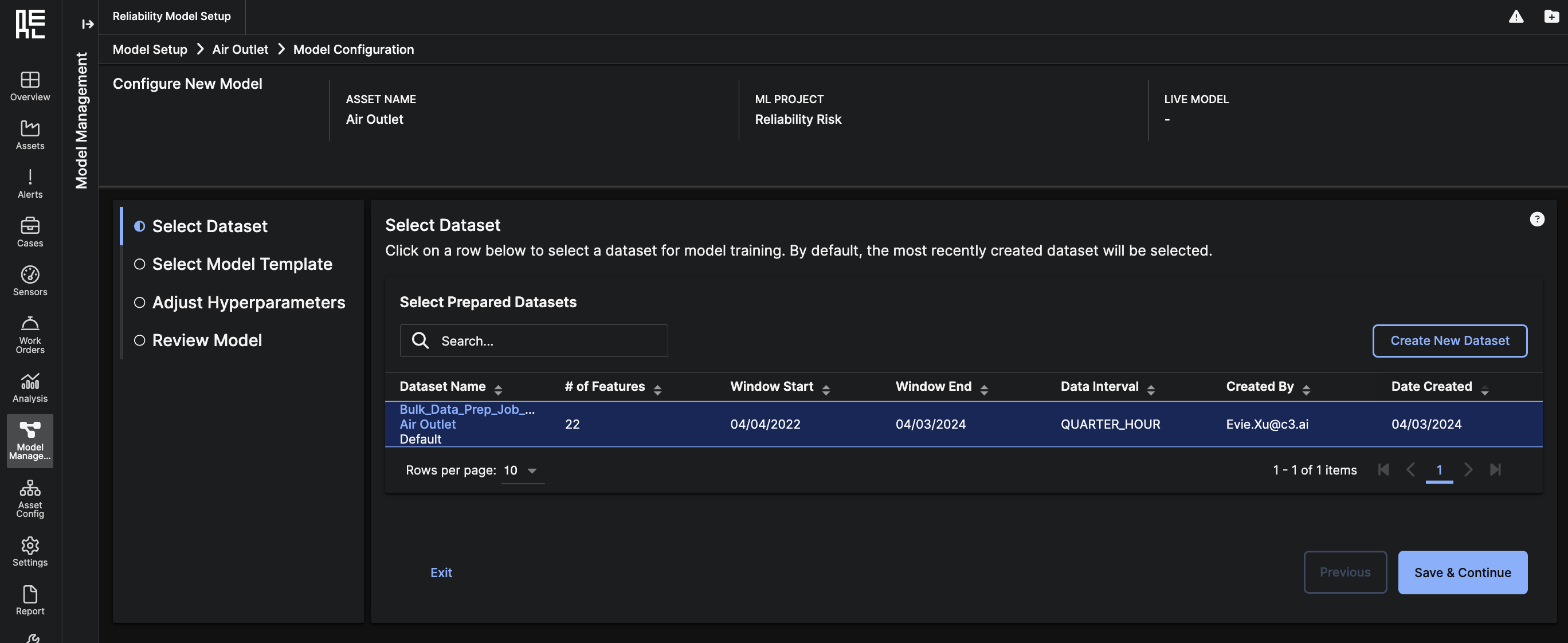

Model Configuration

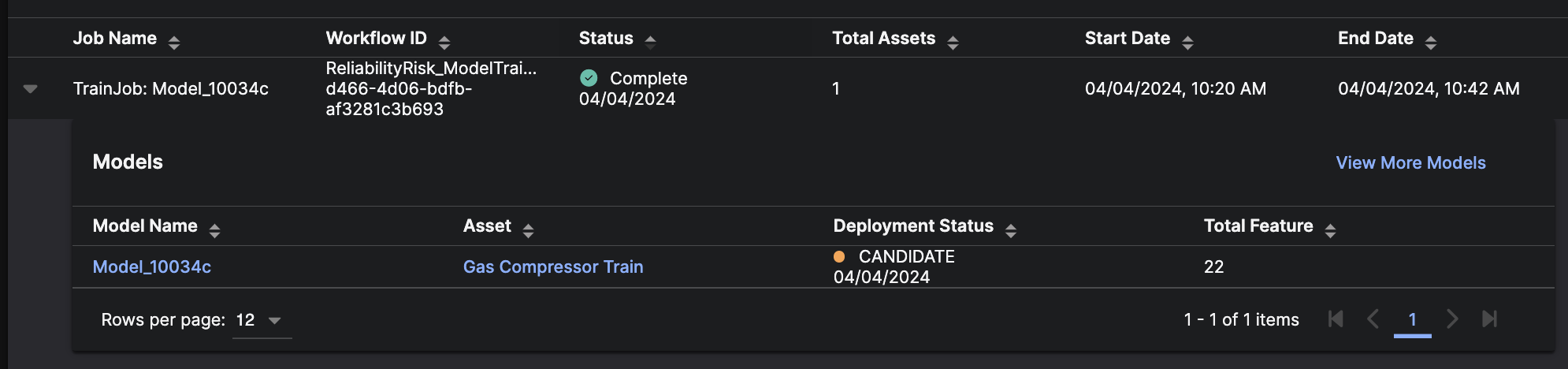

The Model Configuration workflow is the last step to creating, training, and deploying an ML model. This workflow offers users two potential paths: launch and train a model using the default configuration, or edit the model configuration using a 4-step workflow. Editing the model configuration and entering the full workflow lets users select a different dataset, select a model template, and adjust hyperparameters for the chosen model template before training the model. Upon completion of the workflow, a model configuration job will be launched to train the model. Once trained, the model will automatically be deployed as a Candidate model, and users will be able to view the model on both the Model Ops page and the Model Setup - Asset Details screen.

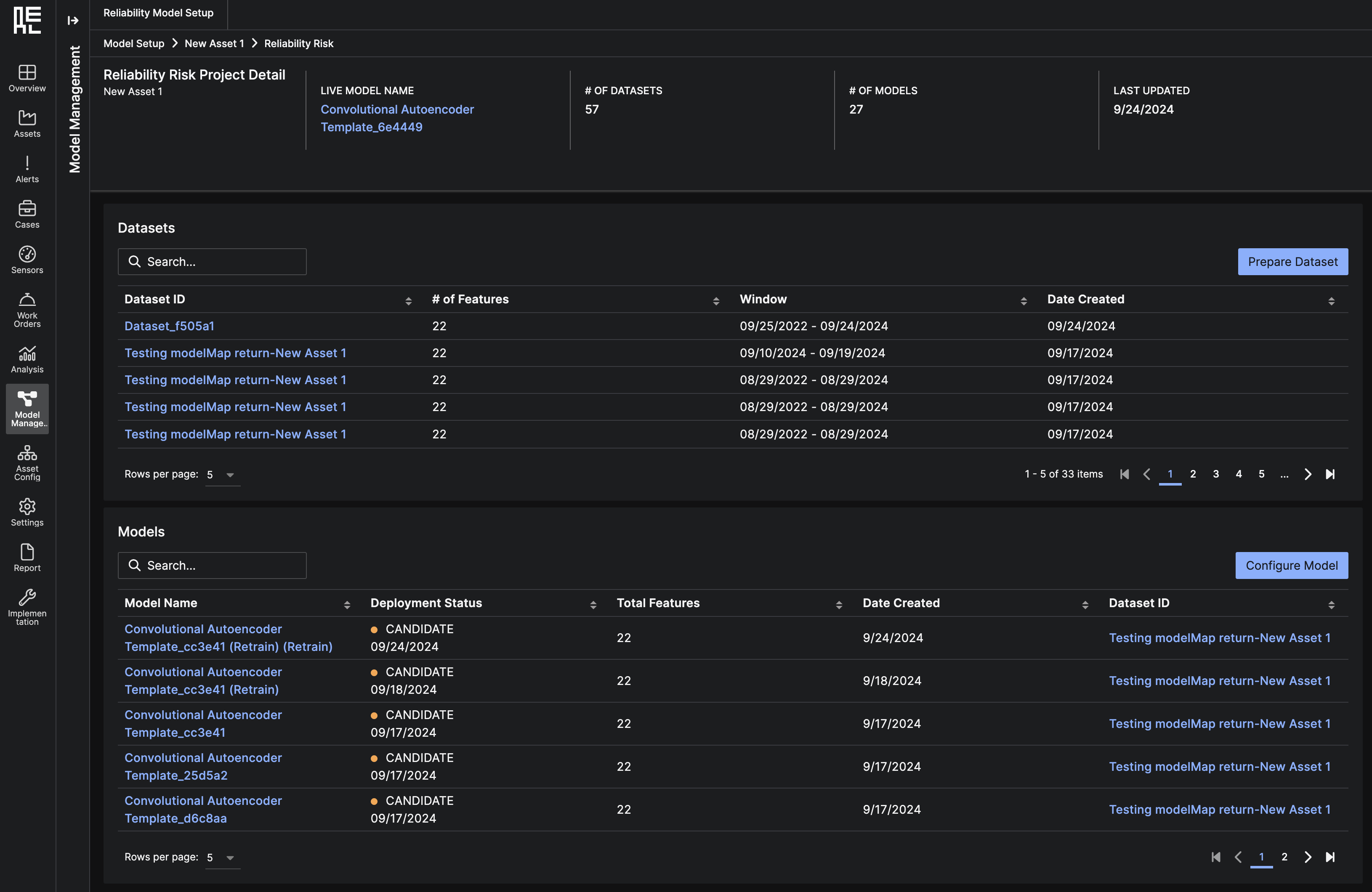

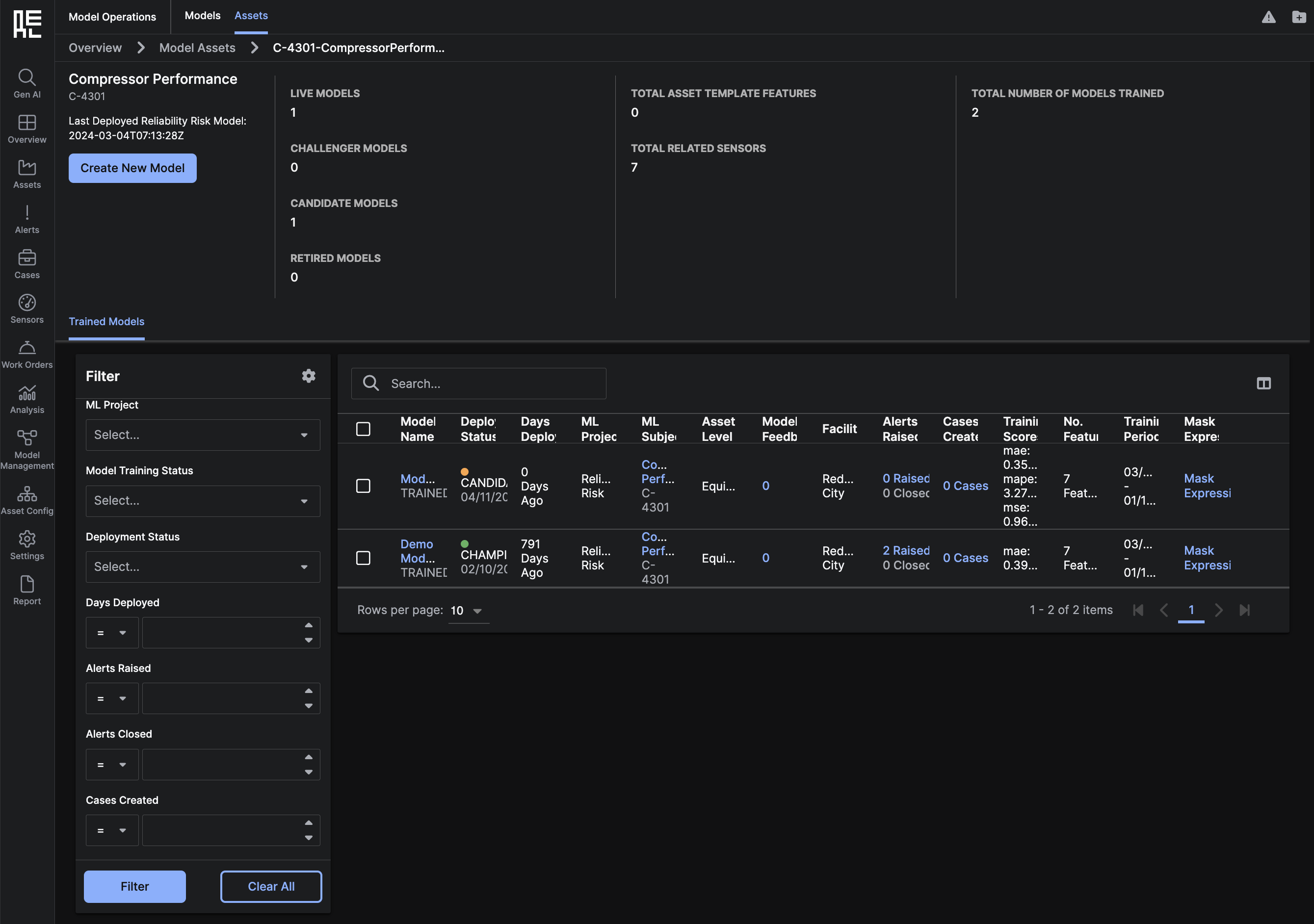

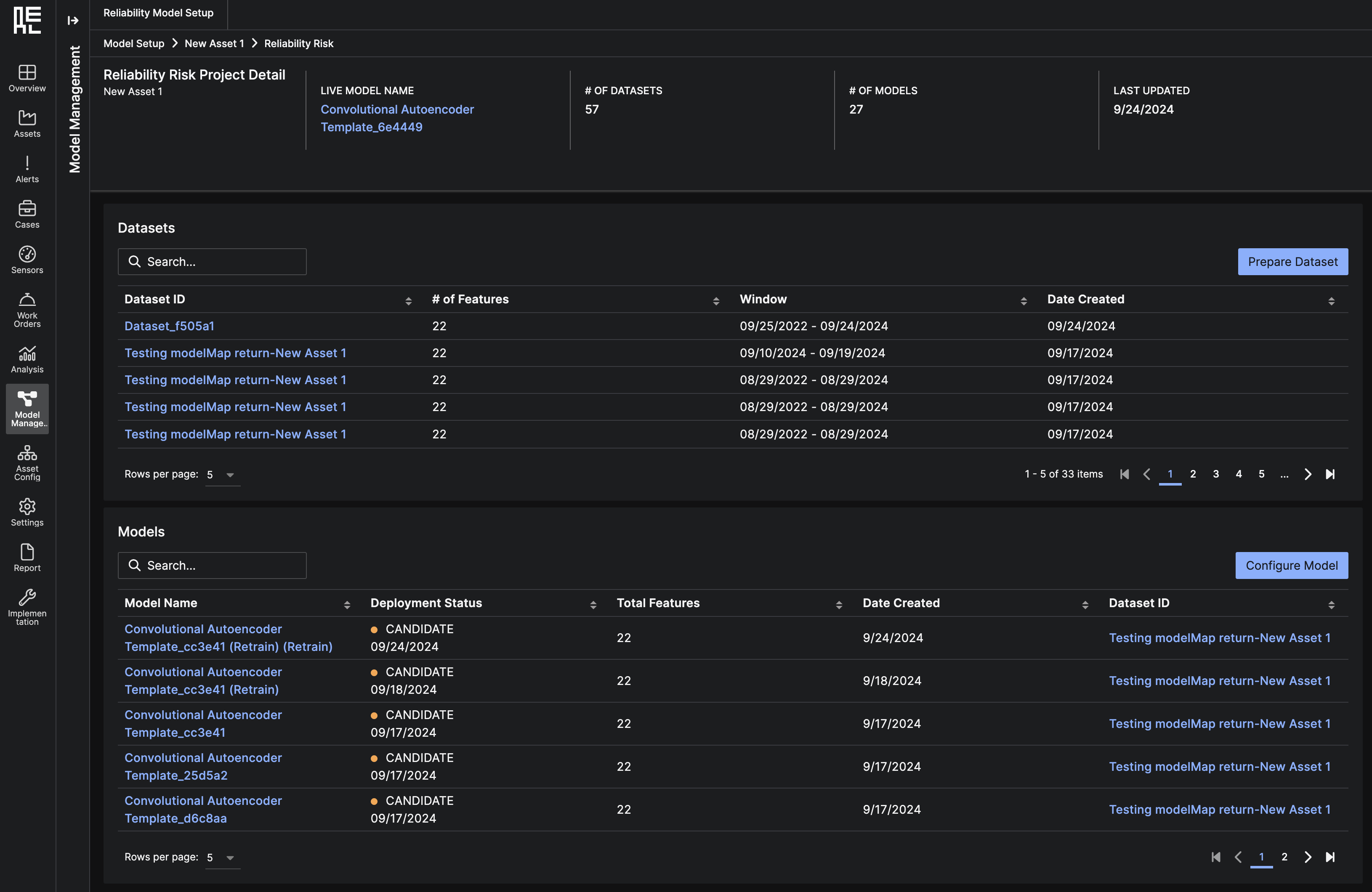

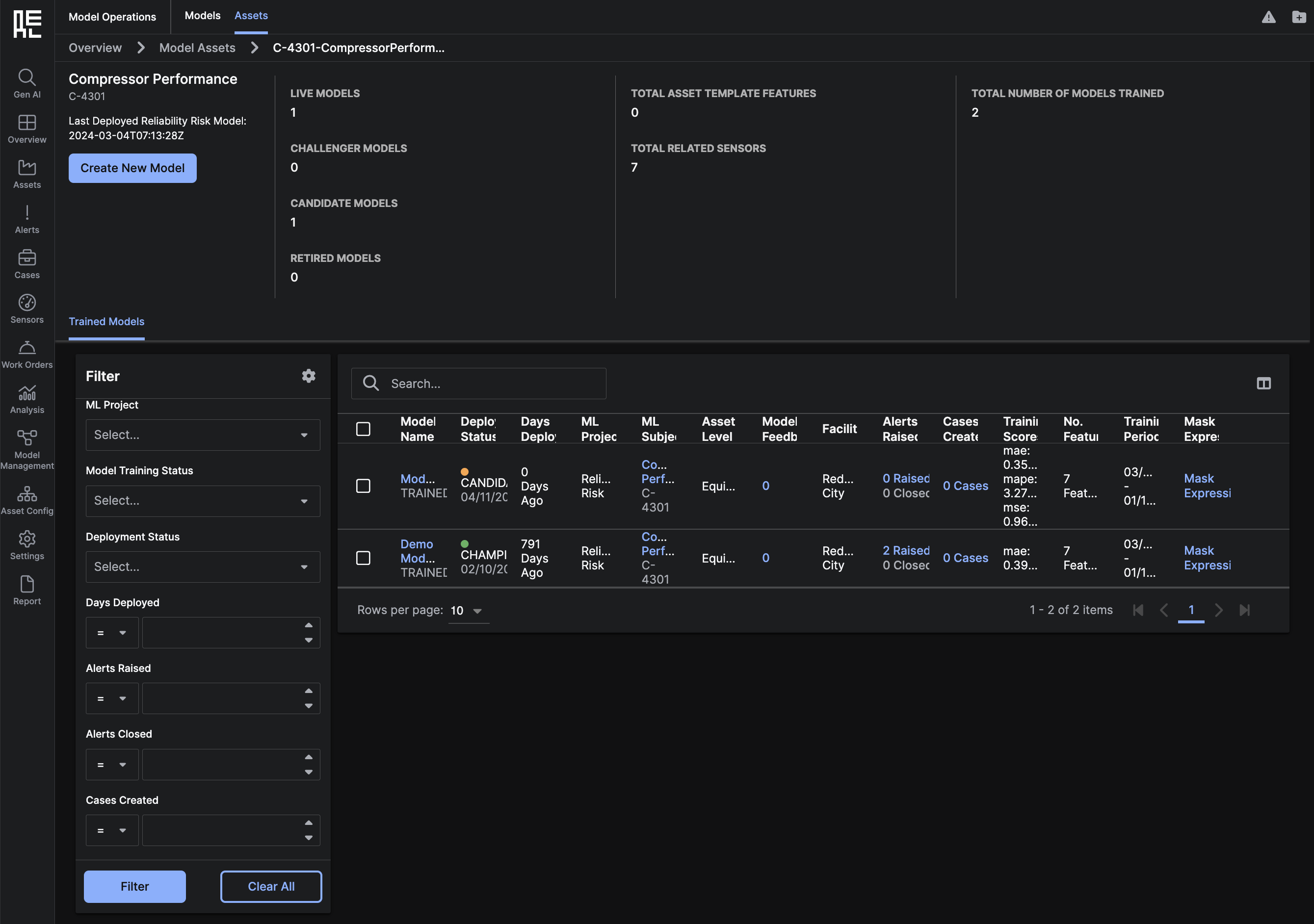

Project Detail Page

The Project Detail page provides an overview of all the model setup activities that are related to this ML Project Type. You can view the datasets and models that exist for this Project Type. From this page, you can also begin the Dataset Preparation workflow by clicking Prepare Dataset or begin the Model Configuration workflow by clicking Configure Model.

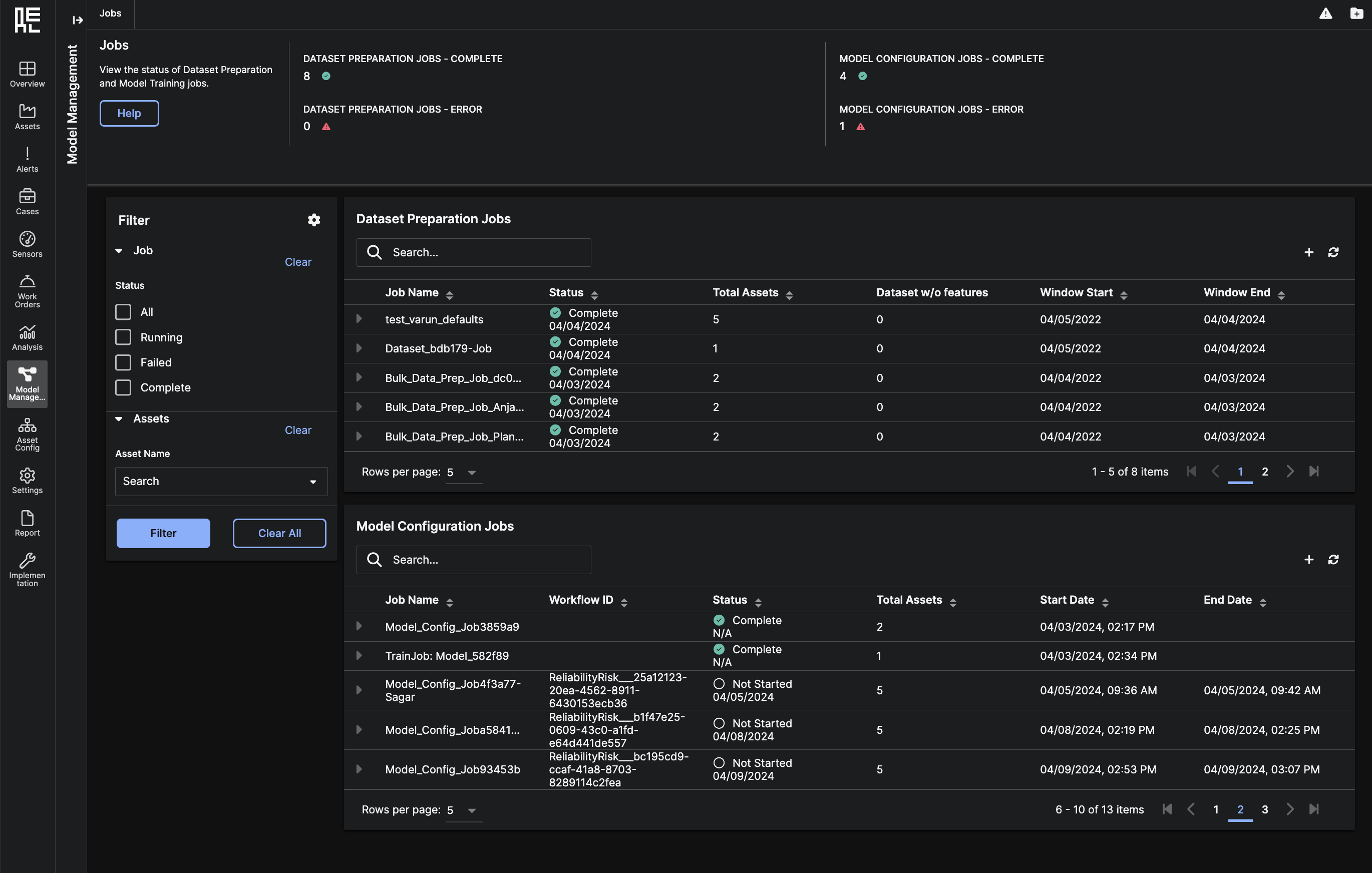

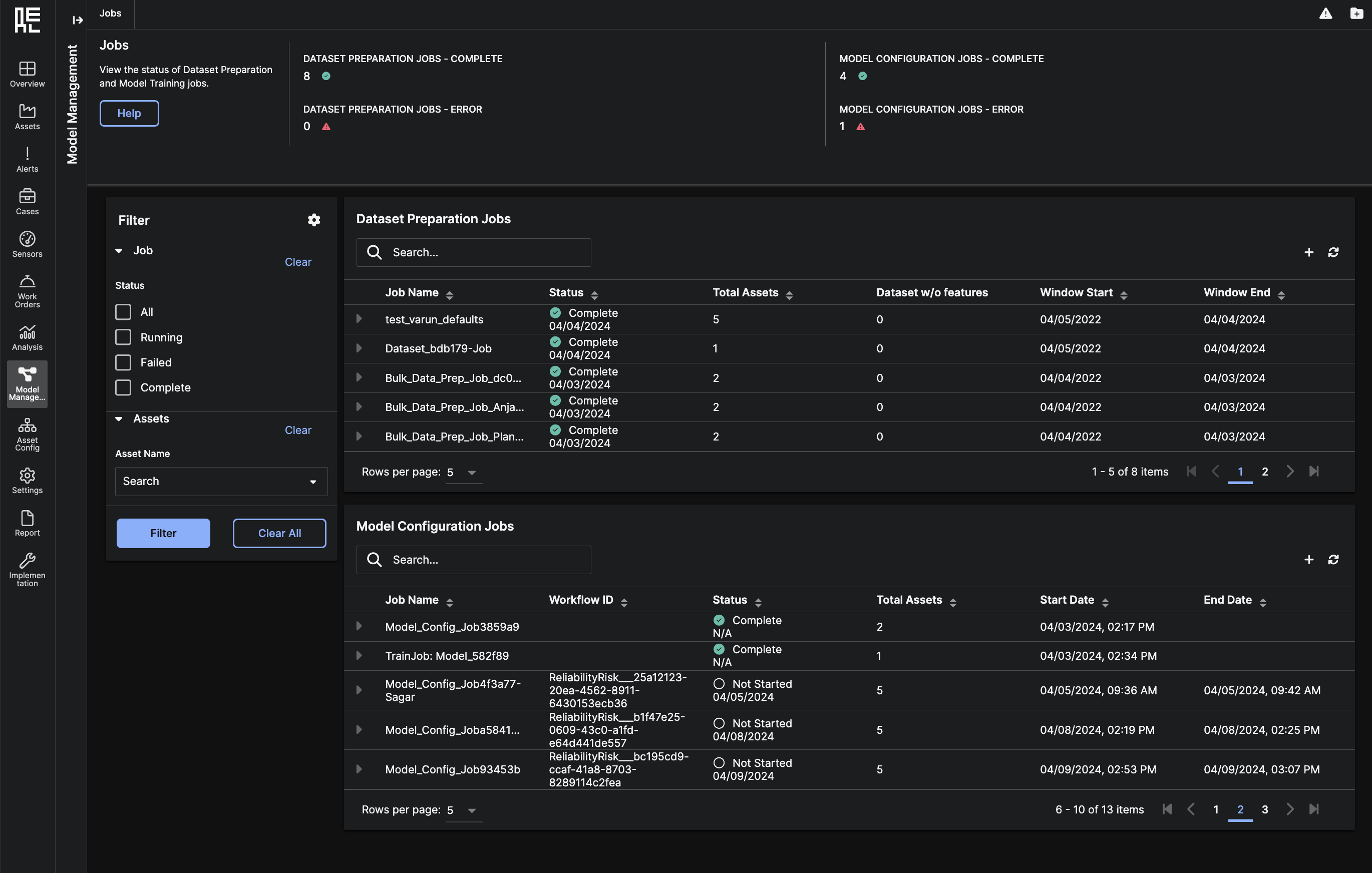

Jobs Sub-Page

The Jobs page showcases the statuses of Dataset Preparation and Model Configuration jobs. After configuring datasets or ML models using the application workflows, this page is a resource for monitoring the progress of the backend jobs. At the top of the page, there are summaries of the following:

- Dataset Preparation Jobs - Complete

- Dataset Preparation Jobs - Error

- Model Configuration Jobs - Complete

- Model Configuration Jobs - Error

- For completed jobs, you can view the datasets or ML models in detail by expanding the item in the grid.

- For failed jobs, you can view an Inline Notification that describes the source error of the failed job, if known. For internal errors that are not user-readable, such as compiling and memory issues, a default error message is shown. (Note: In the Model Configuration Jobs grid, a job can fail in a post-creation or post-training workflow, such as validation, and still upsert the models themselves in the app. In those cases, both the Error Inline Notification and the nested grid are available to the user.)
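As a rough illustration of how the four summary counts at the top of the Jobs page relate to the underlying jobs, the following Python sketch tallies a list of job records by type and status. The record layout, the `summarize_jobs` helper, and the "Running" status are hypothetical and do not reflect the application's data model or API.

```python
from collections import Counter

# Hypothetical job records; field names and values are assumptions for this example.
jobs = [
    {"type": "Dataset Preparation", "status": "Complete"},
    {"type": "Dataset Preparation", "status": "Error"},
    {"type": "Model Configuration", "status": "Complete"},
    {"type": "Model Configuration", "status": "Complete"},
    {"type": "Model Configuration", "status": "Running"},
]


def summarize_jobs(jobs):
    """Count Complete and Error jobs for each job type, mirroring the four summaries."""
    counts = Counter((job["type"], job["status"]) for job in jobs)
    summary = {}
    for job_type in ("Dataset Preparation", "Model Configuration"):
        for status in ("Complete", "Error"):
            # Counter returns 0 for a (type, status) pair that never occurred.
            summary[f"{job_type} Jobs - {status}"] = counts[(job_type, status)]
    return summary


summary = summarize_jobs(jobs)
for label, count in summary.items():
    print(label, count)
```

In this sketch, jobs in any other state (such as the assumed "Running" status) are simply left out of the four totals.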

The Dataset Preparation Jobs grid shows all the Dataset Preparation jobs for the deployment. Each row in the grid can be expanded to show the one or more prepared datasets that are part of the job. From a set of completed datasets, you can:

- Click on the Dataset ID to view the Dataset Summary page

- Click on the Asset to view the Model Setup Asset Detail page

- Use the checkboxes to select one or more datasets, then use the table action to begin Bulk Model Configuration

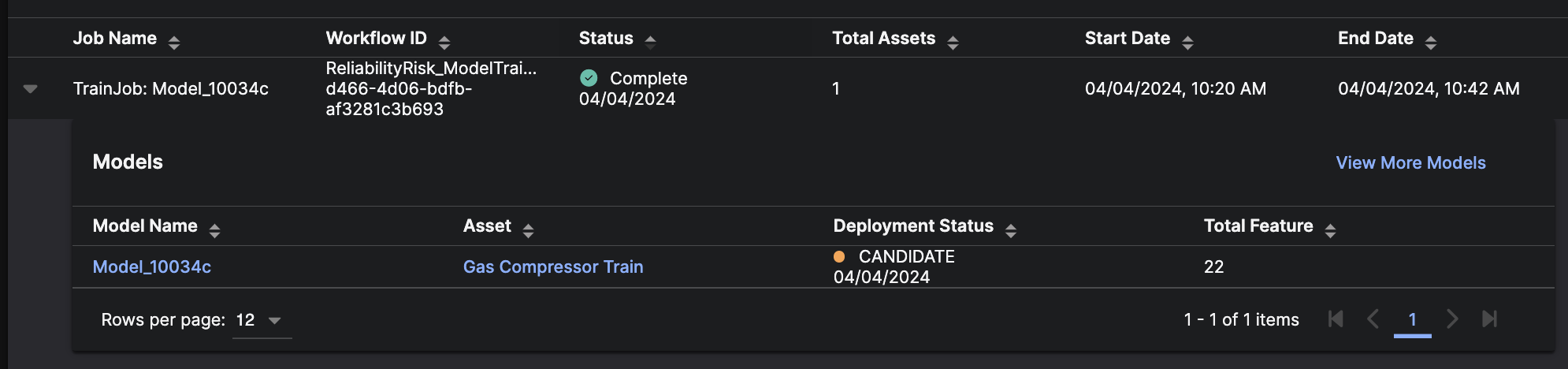

The Model Configuration Jobs grid shows all the Model Configuration jobs for the deployment. Each row in the grid can be expanded to show the one or more Trained Models that are part of the job.

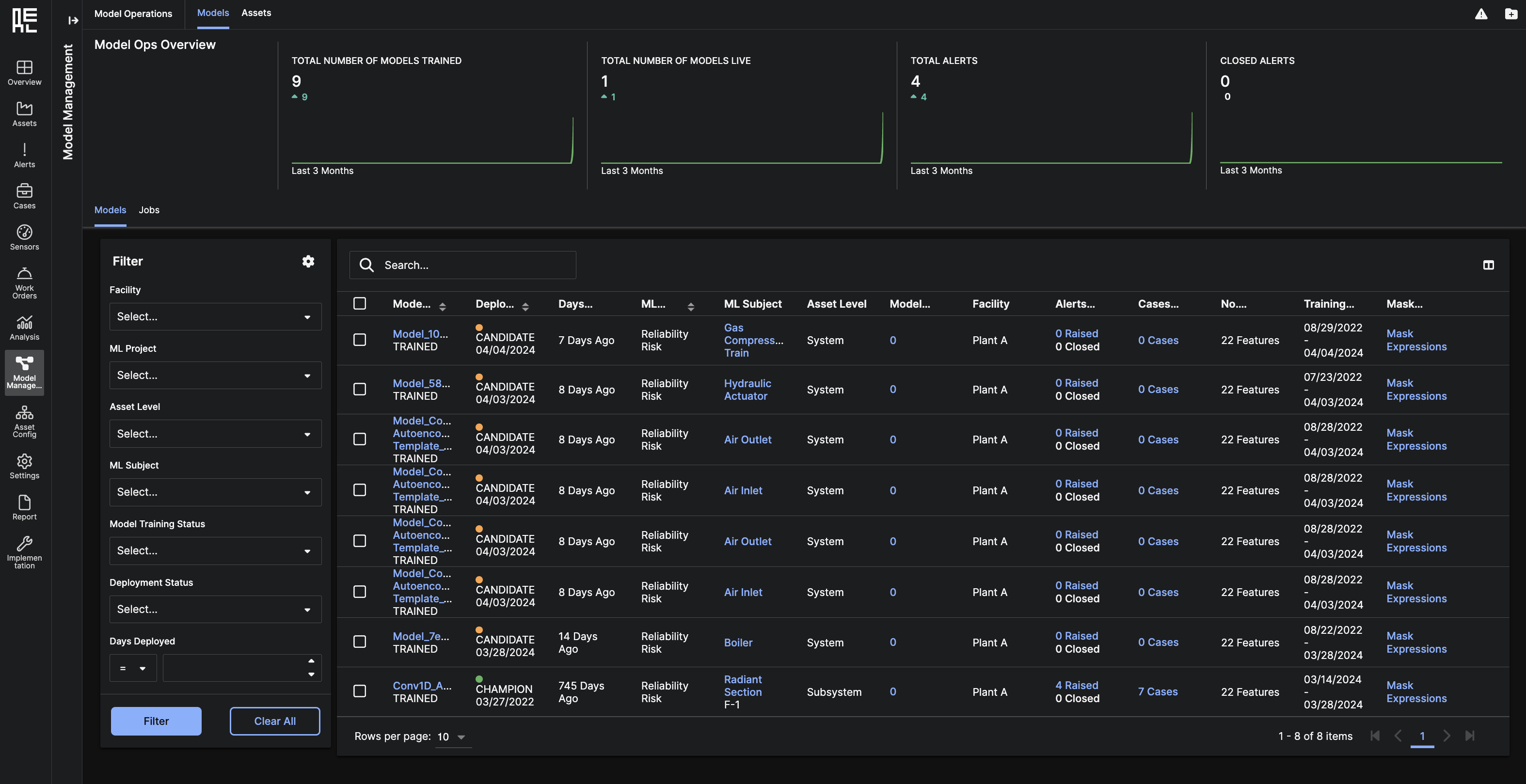

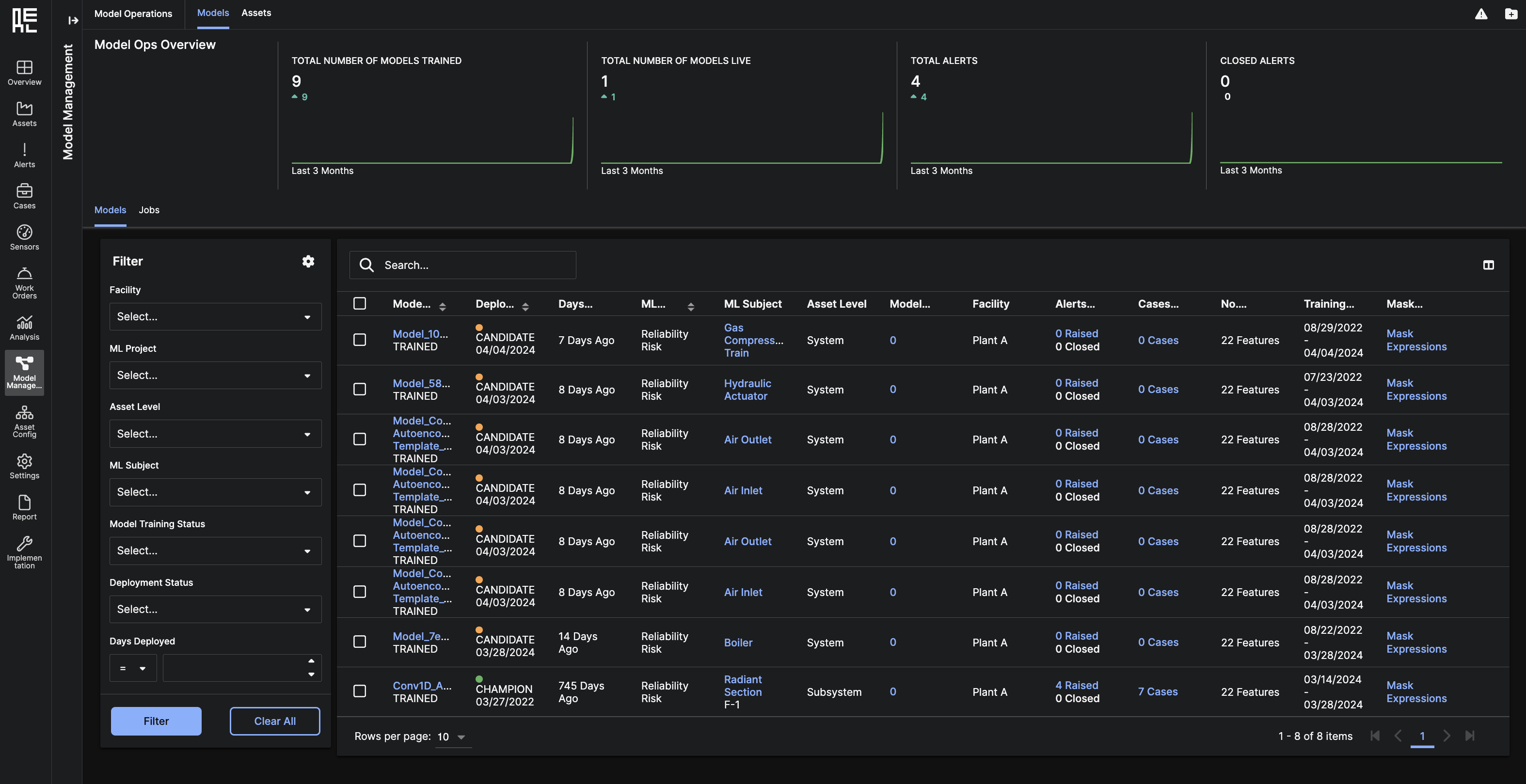

Model Ops Sub-Page

The Model Operations Page allows you to review the performance of your Asset Models.

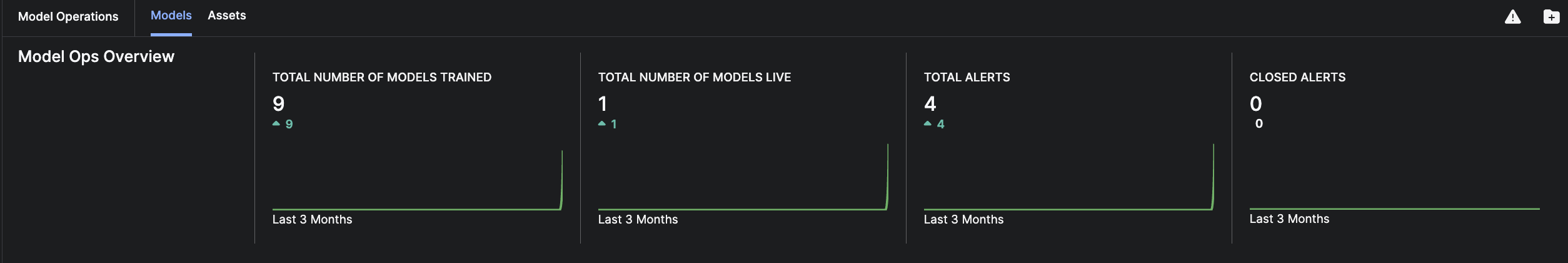

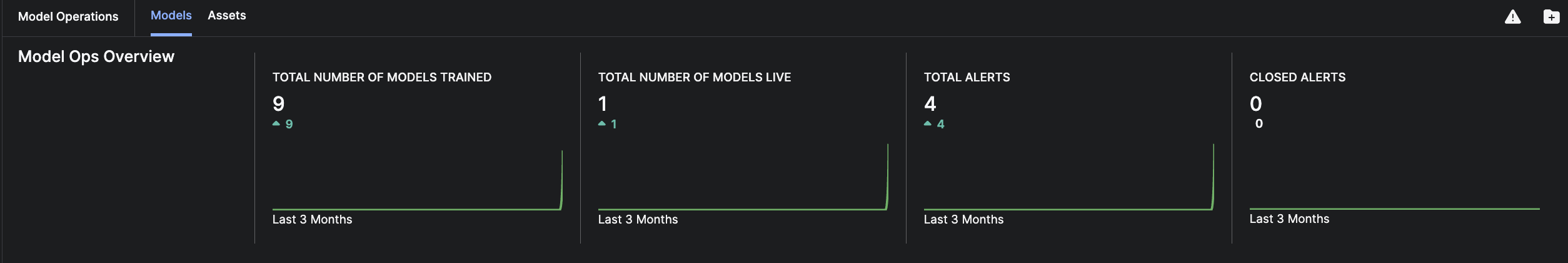

Models Tab

At the top of the Models tab, there are roll-up summaries over the past 3 months of the following four metrics:

- Number of models trained

- Number of models live

- Total alerts

- Closed alerts

Below, you can see a grid table of all

Below, you can see a grid table of all Models that are deployed in the application. From the table, you can:

- Search for specific

Modelsusing keywords. - Change the columns of data that are visible on the table.

- Retrain a

Modelby specifying a new training period. - Change the deployments status of the

Modelto Champion, Candidate, Retired, or Challenger. - Click on the

Model Nameto redirect to the Model Details Page. - Click on

ML Subjectto redirect to the Asset Models Details Page. - Click on

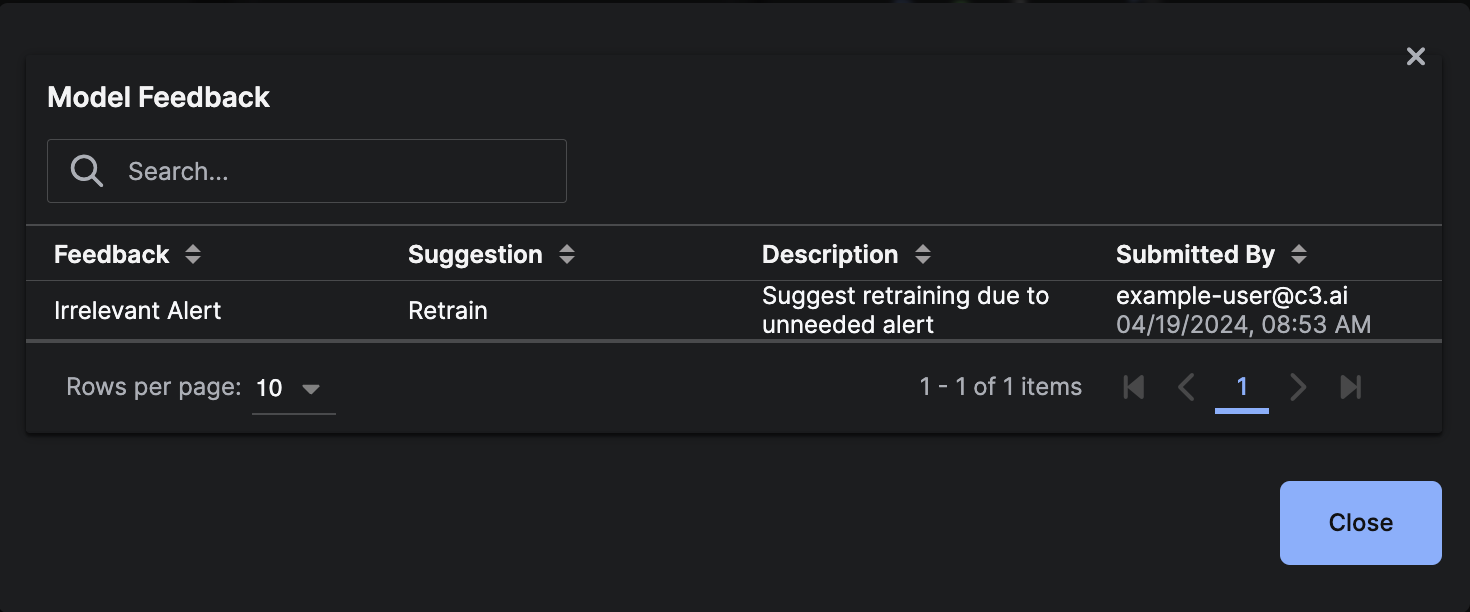

Model Feedbackto show a modal which allows you to search for feedback on theModel.

- Click on

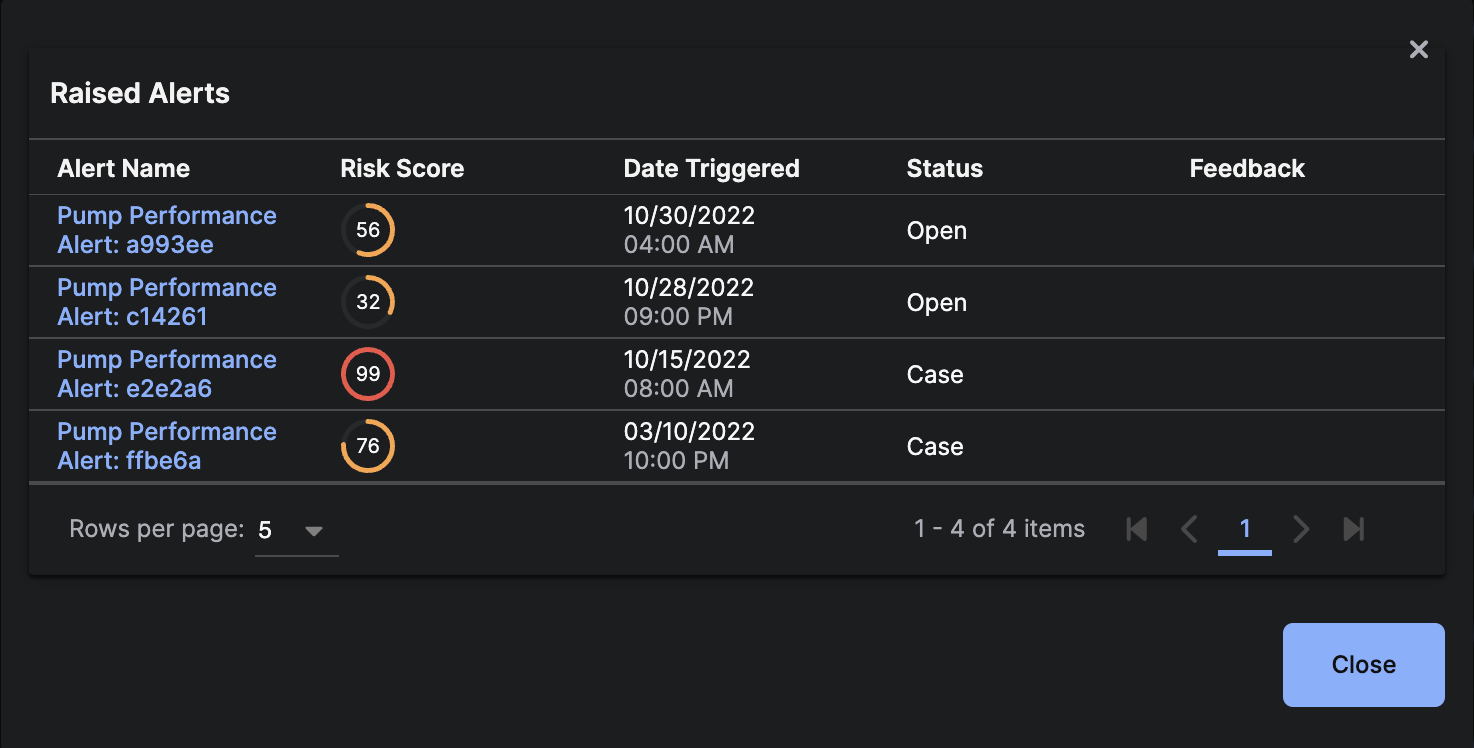

Alerts Raisedto show a modal with details ofAlertsrelated to theModel.

- Click on

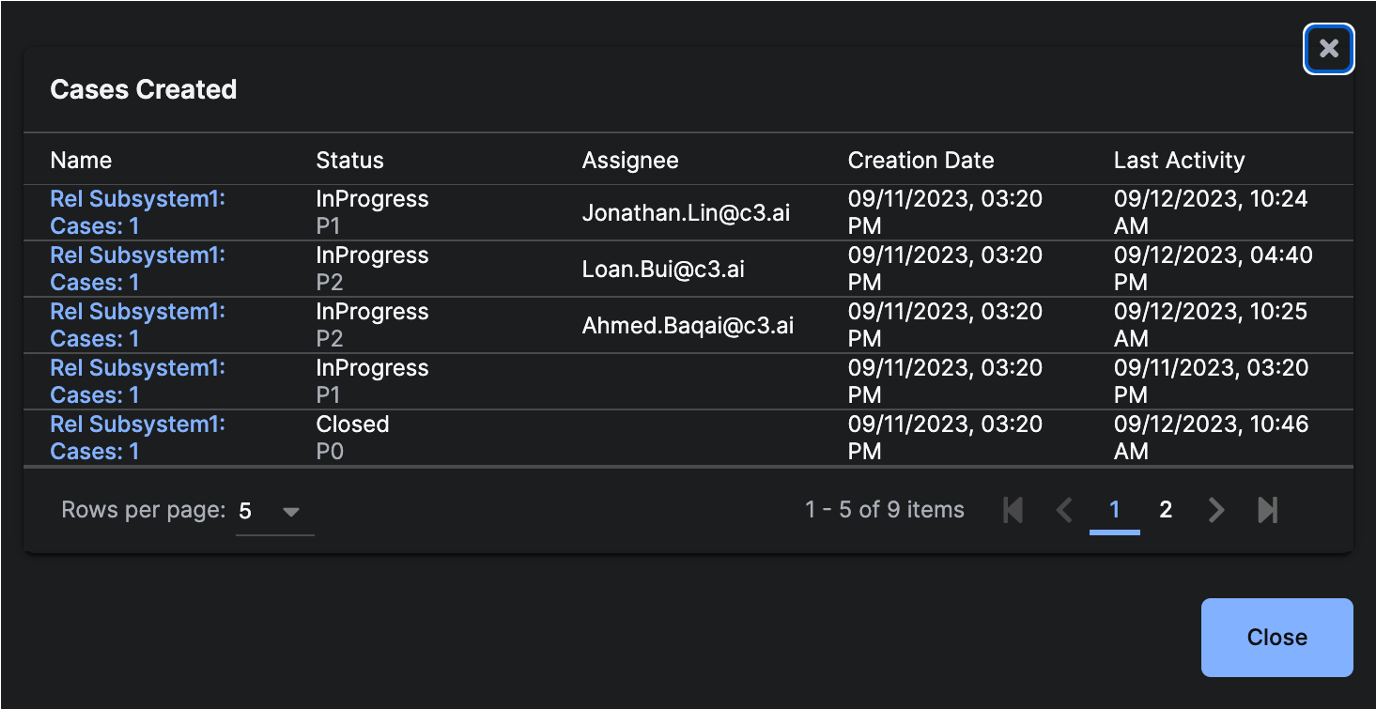

Cases Createdto show a modal with details ofCasesrelated to theModel.

- Click on

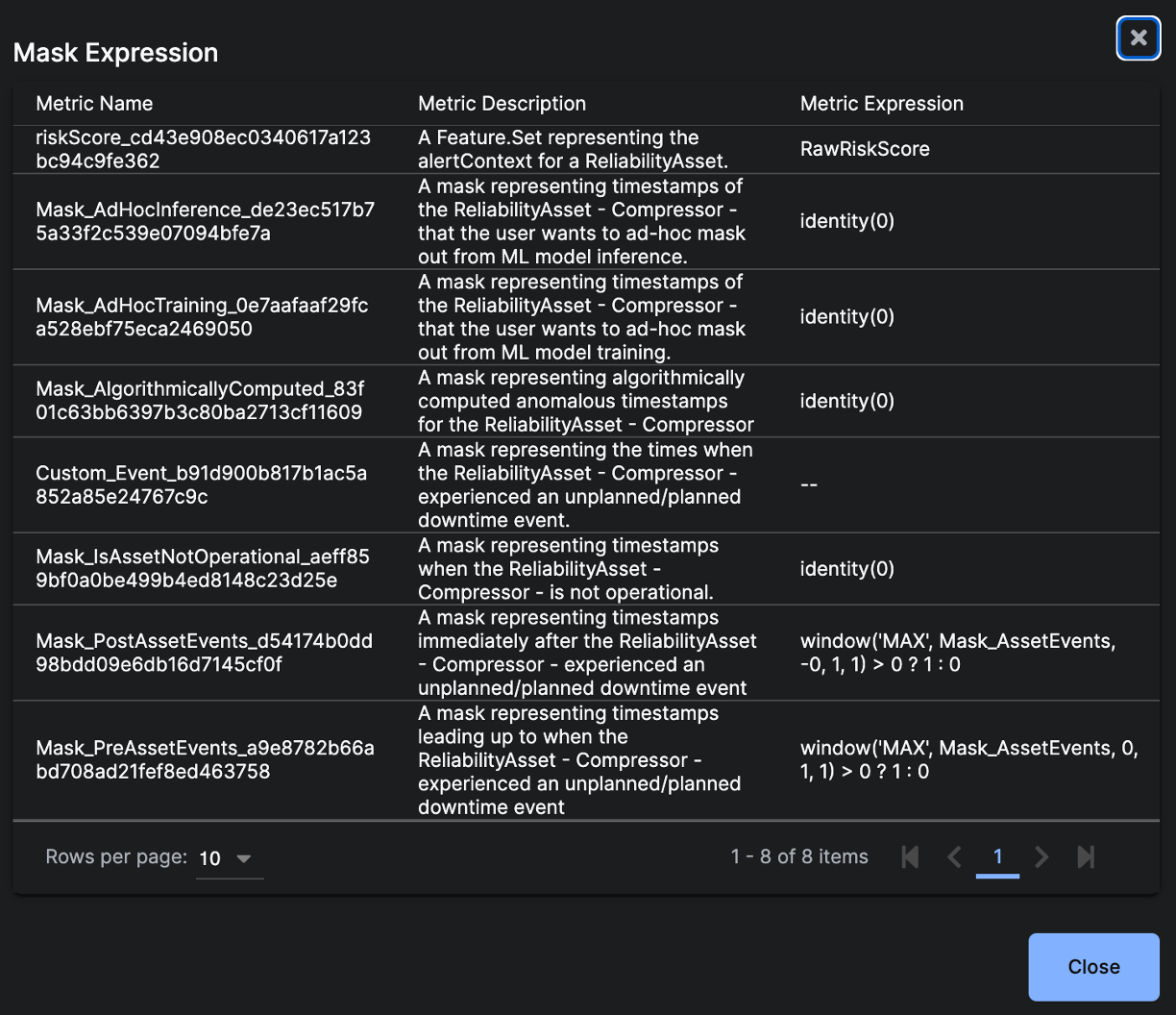

Mask Expressionsto show a modal with details ofMask Expressionsrelated to theModel.

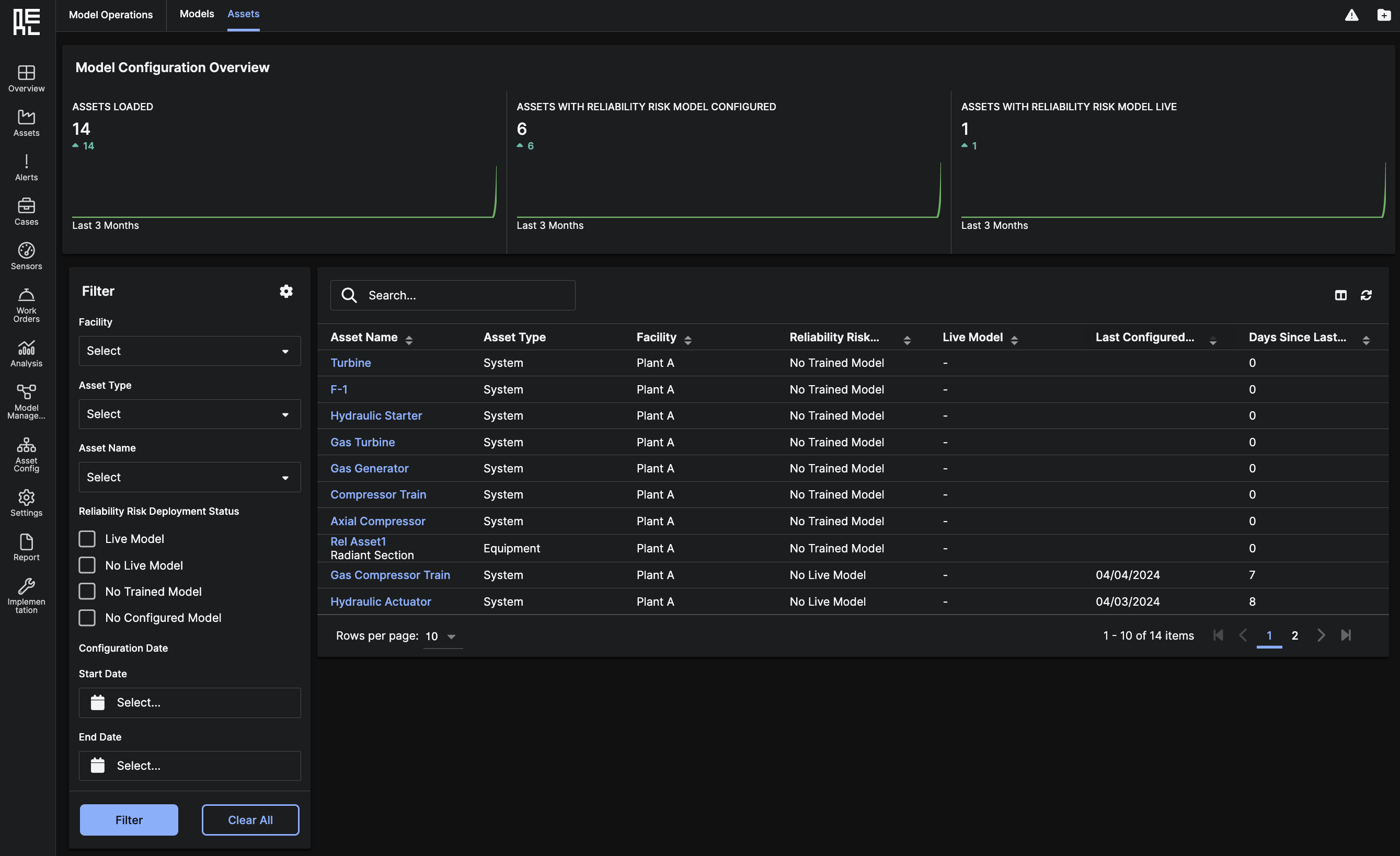

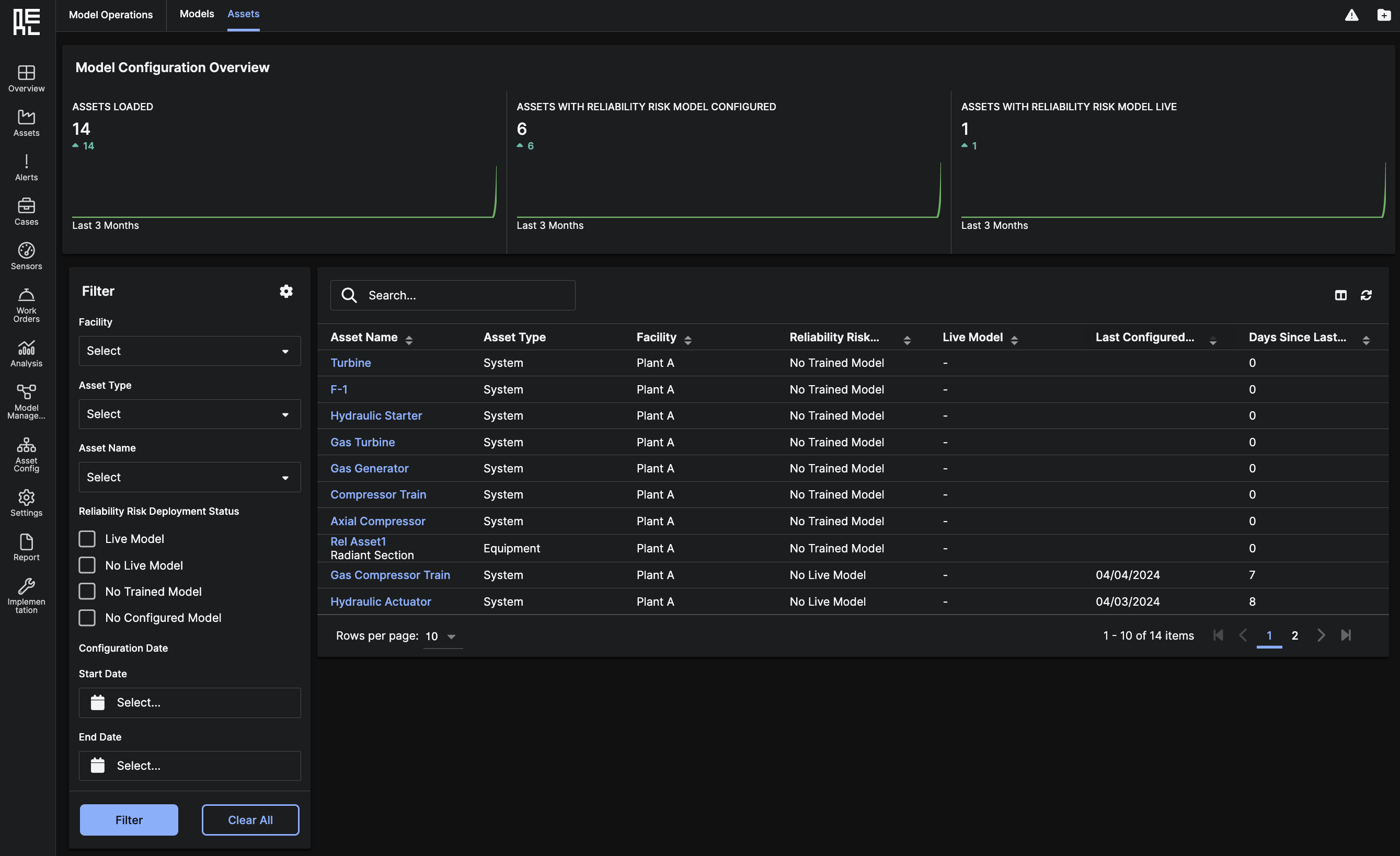

Assets Tab

At the top of the Assets tab, there are roll-up summaries over the past 3 months of the following three metrics:

- Assets loaded

- Assets with reliability risk model configured

- Assets with reliability risk model live

Below, you can see a grid table of all Assets and whether they have associated Models.

Clicking on the Asset Name redirects you to the Asset Models Details Page, which shows all of the Models deployed on that Asset.

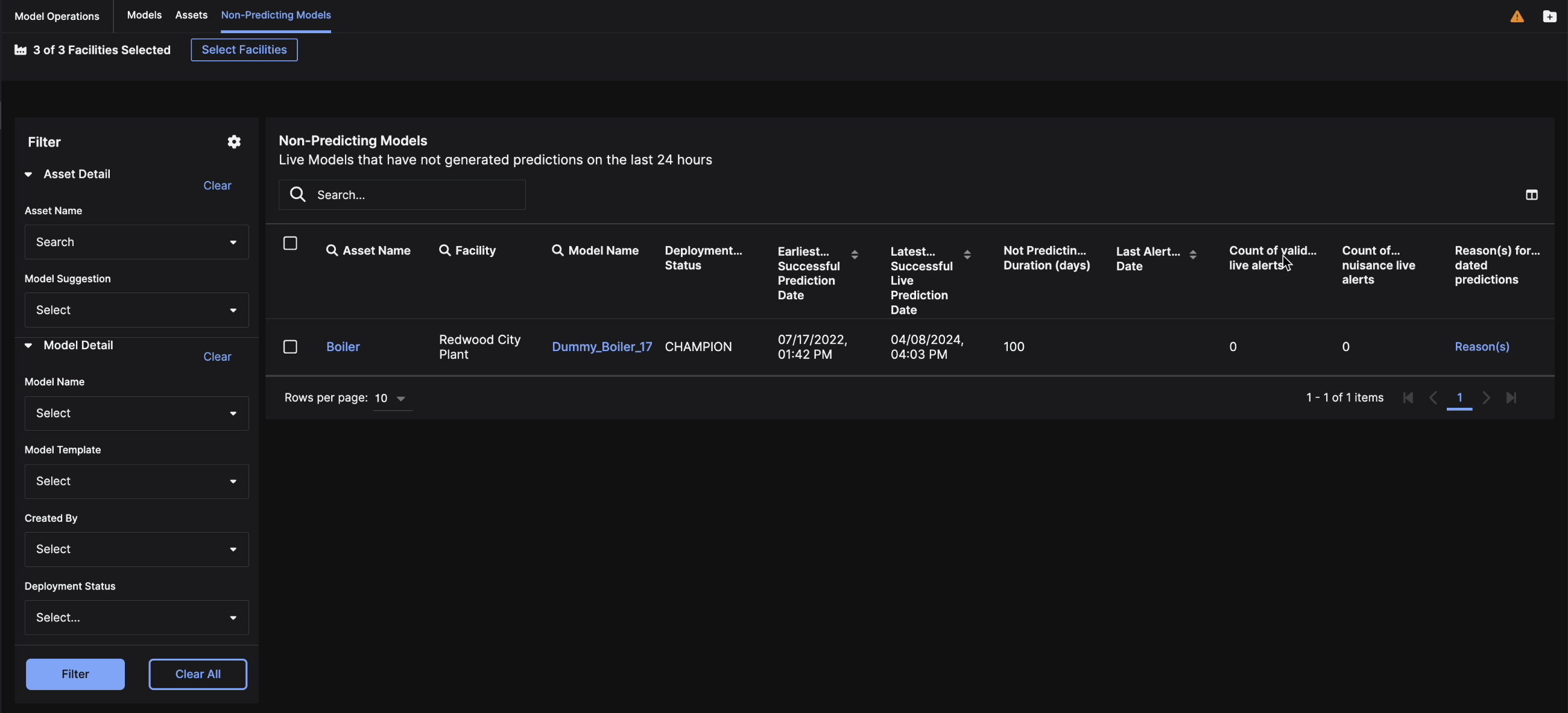

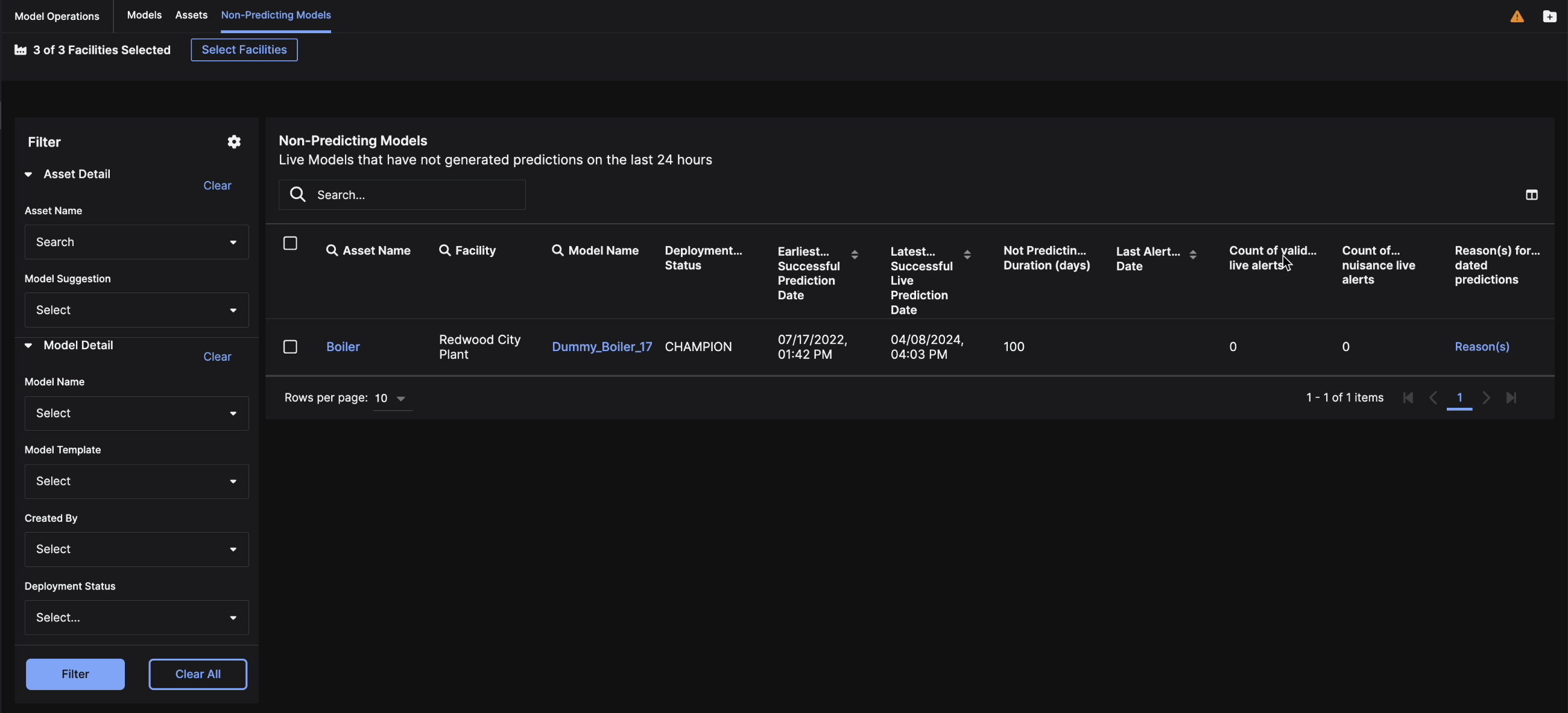

Non-Predicting Models Tab

The third tab on the Model Operations page is the Non-Predicting Models tab. This tab shows all the live models that have not generated predictions in the last 24 hours.

You can use this page to investigate any models that may not be performing normally and have not generated predictions. Click the Reasons hyperlink on the far-right column to view potential reasons for abnormal model performance.
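The 24-hour rule can be pictured with a short Python sketch that filters a list of models down to the live ones whose most recent prediction is missing or older than the window. This is an assumption-laden illustration, not the application's logic: the `find_non_predicting` helper, the `live` and `last_prediction` fields, and the sample models are all hypothetical.

```python
from datetime import datetime, timedelta


def find_non_predicting(models, now, window=timedelta(hours=24)):
    """Return names of live models with no prediction inside the window ending at `now`."""
    cutoff = now - window
    stale = []
    for model in models:
        if not model["live"]:
            continue  # only live models are considered
        last = model["last_prediction"]
        if last is None or last < cutoff:
            stale.append(model["name"])
    return stale


# Hypothetical models; names and timestamps are made up for this example.
now = datetime(2024, 6, 2, 12, 0)
models = [
    {"name": "pump-a", "live": True, "last_prediction": datetime(2024, 6, 2, 9, 0)},
    {"name": "pump-b", "live": True, "last_prediction": datetime(2024, 5, 31, 12, 0)},
    {"name": "pump-c", "live": True, "last_prediction": None},
    {"name": "pump-d", "live": False, "last_prediction": datetime(2024, 5, 1, 0, 0)},
]

stale_models = find_non_predicting(models, now)
print(stale_models)  # ['pump-b', 'pump-c']
```

Here `pump-a` predicted three hours ago and is excluded, while `pump-d` is excluded because it is not live.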

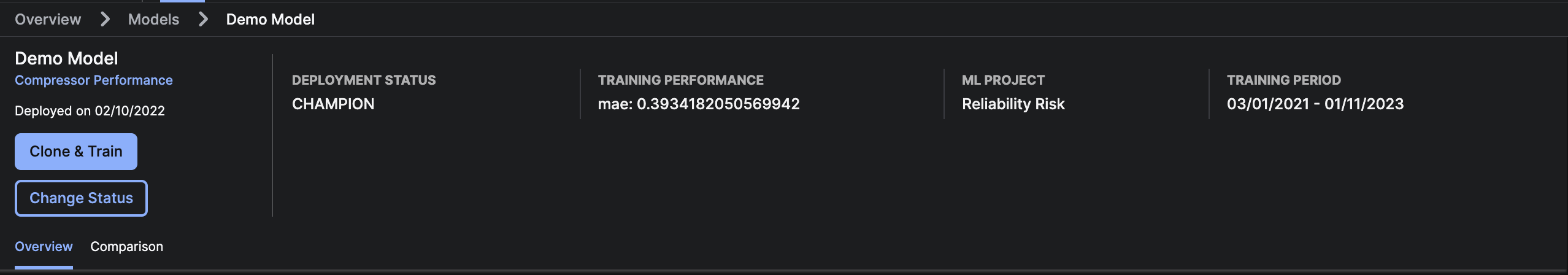

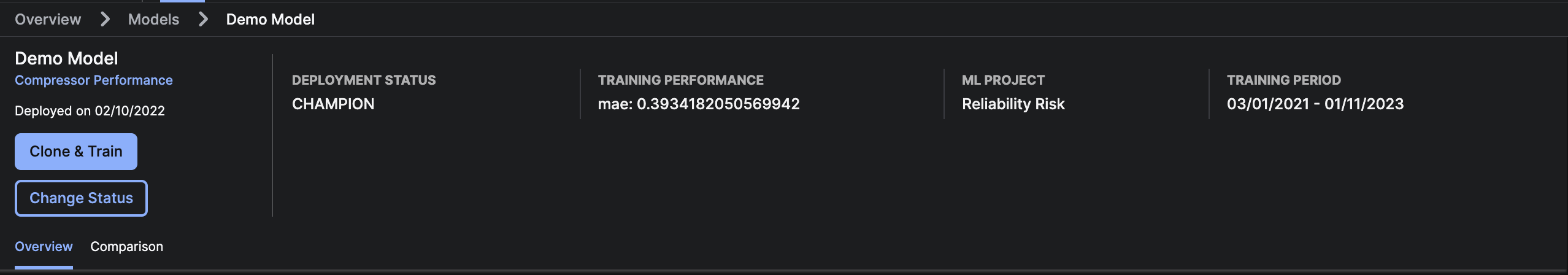

Model Details

The Model Details Page allows you to review the Model’s Risk Score, Feature List, AI Alerts, Model Feedback, and Events on the Asset.

At the top of the page, you can:

- Retrain a Model by specifying a new training period.

- Change the deployment status of the Model to Champion, Candidate, Retired, or Challenger.

Model Overview

The Overview tab consists of:

- Prediction Analysis plot to show the Model output over time.

- AI Alerts table showing all Alerts generated from the Model output. Clicking on the Alert Name will redirect to the Alert Details Page.

- Model feedback table showing all user submitted feedback on the Model outputs.

- Events table showing all events occurring on the Asset.

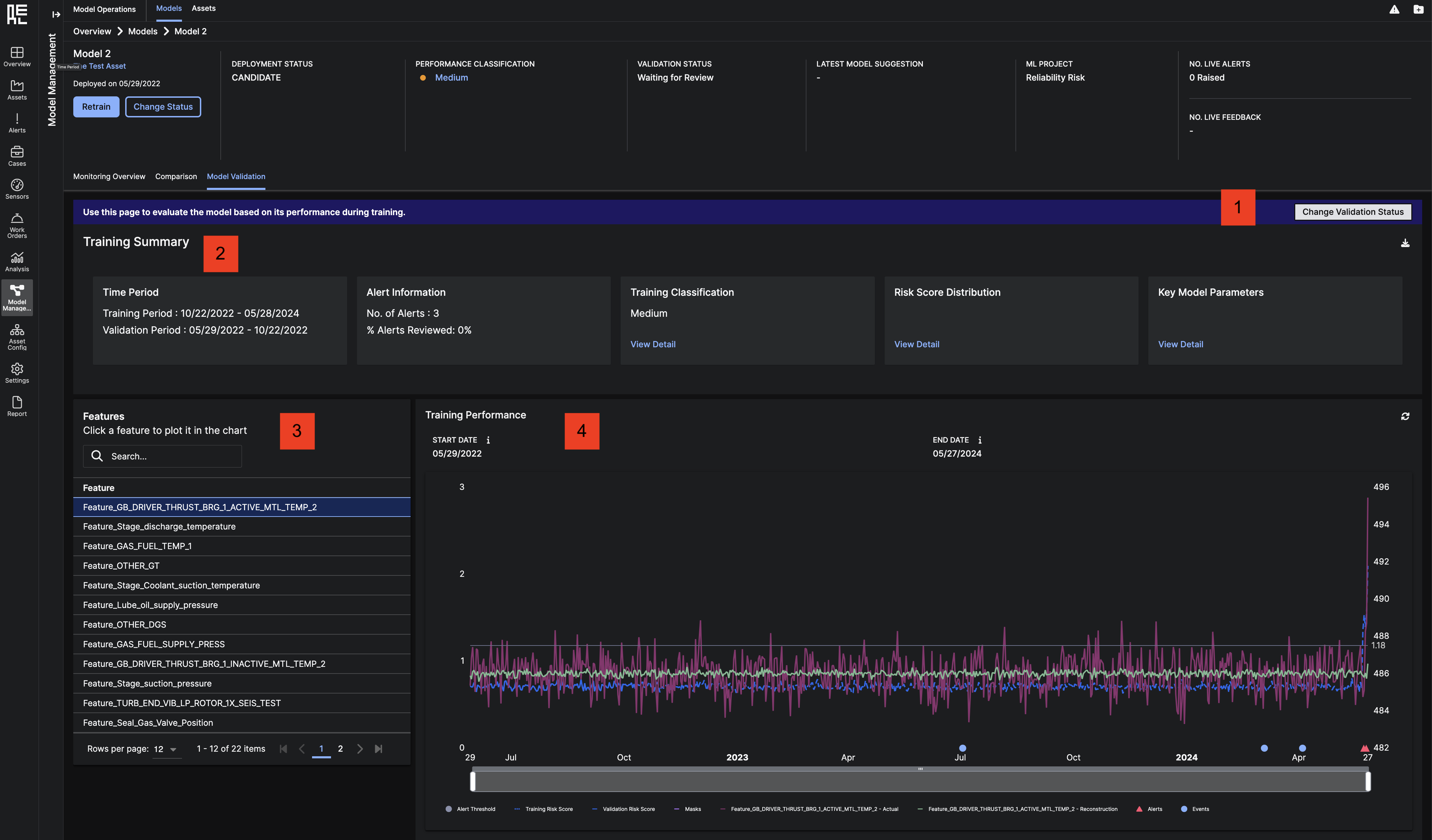

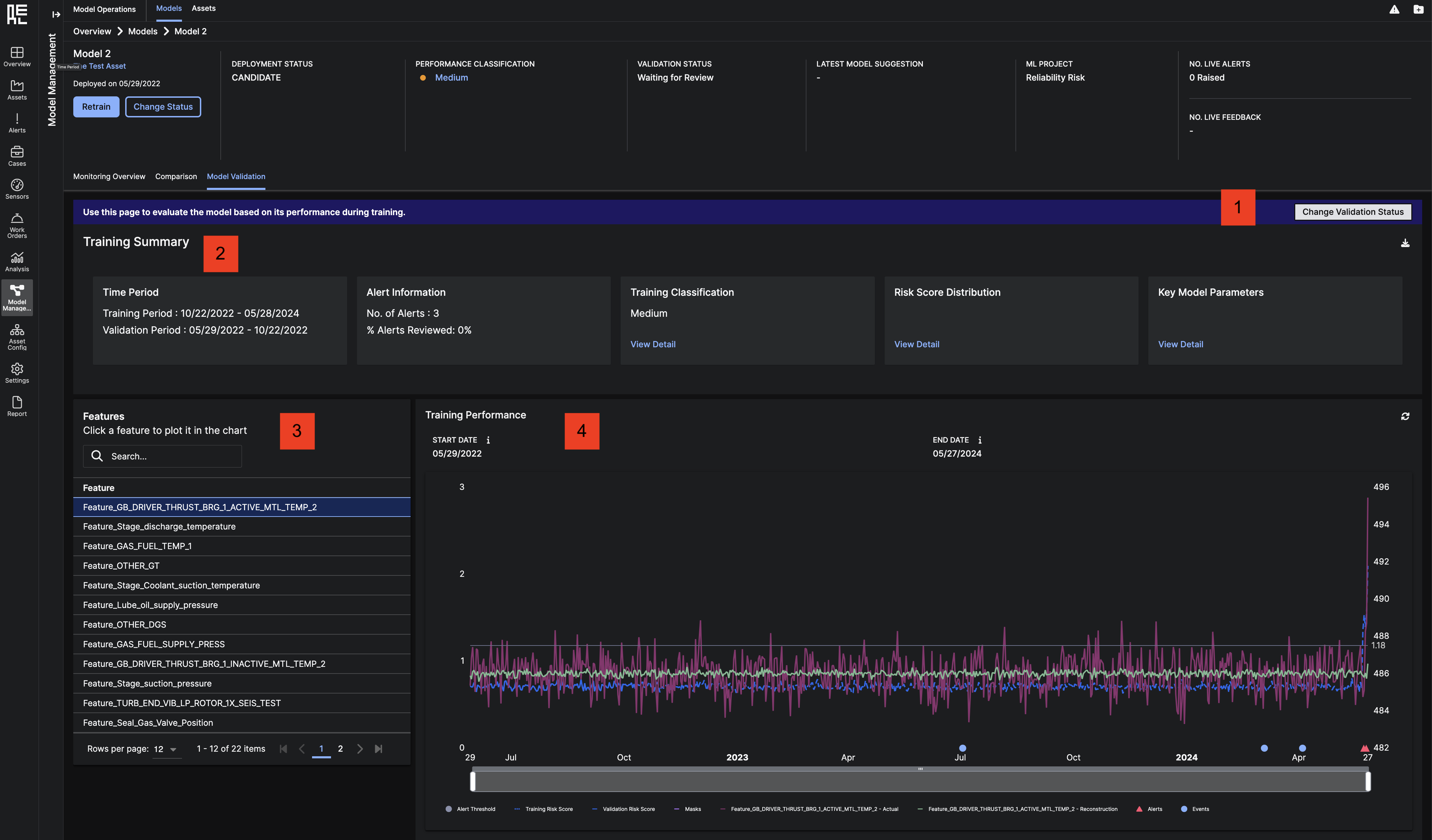

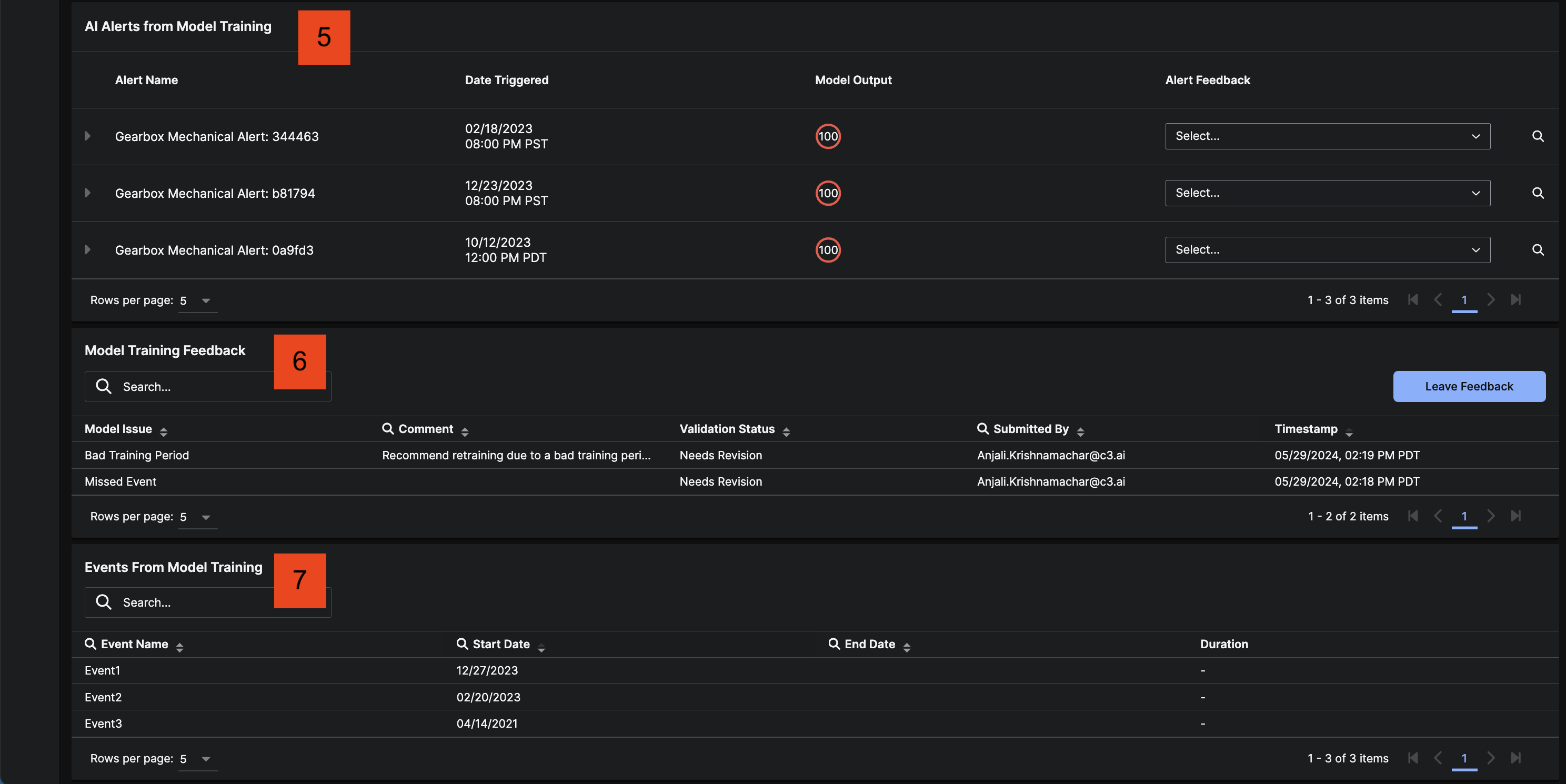

Model Validation

The Model Validation tab contains information to help review and analyze the training performance of the ML model. Reviewing this performance summary can help you determine if the model is ready to be deployed as “live” or if it needs to be re-configured or re-trained. This page contains:

- Banner to Change Validation Status for this model.

- Training Summary with information about the training and validation time periods, alerts triggered during training, training classification, risk score distribution, and key model parameters for this model. You can also download the Model Validation Summary from the top right corner of this section.

- Features grid, where you can click on individual features to plot them on the Training Performance Chart.

- Training Performance Chart that displays Training Risk Score, Validation Risk Score, triggered alerts, masks, and any plotted features.

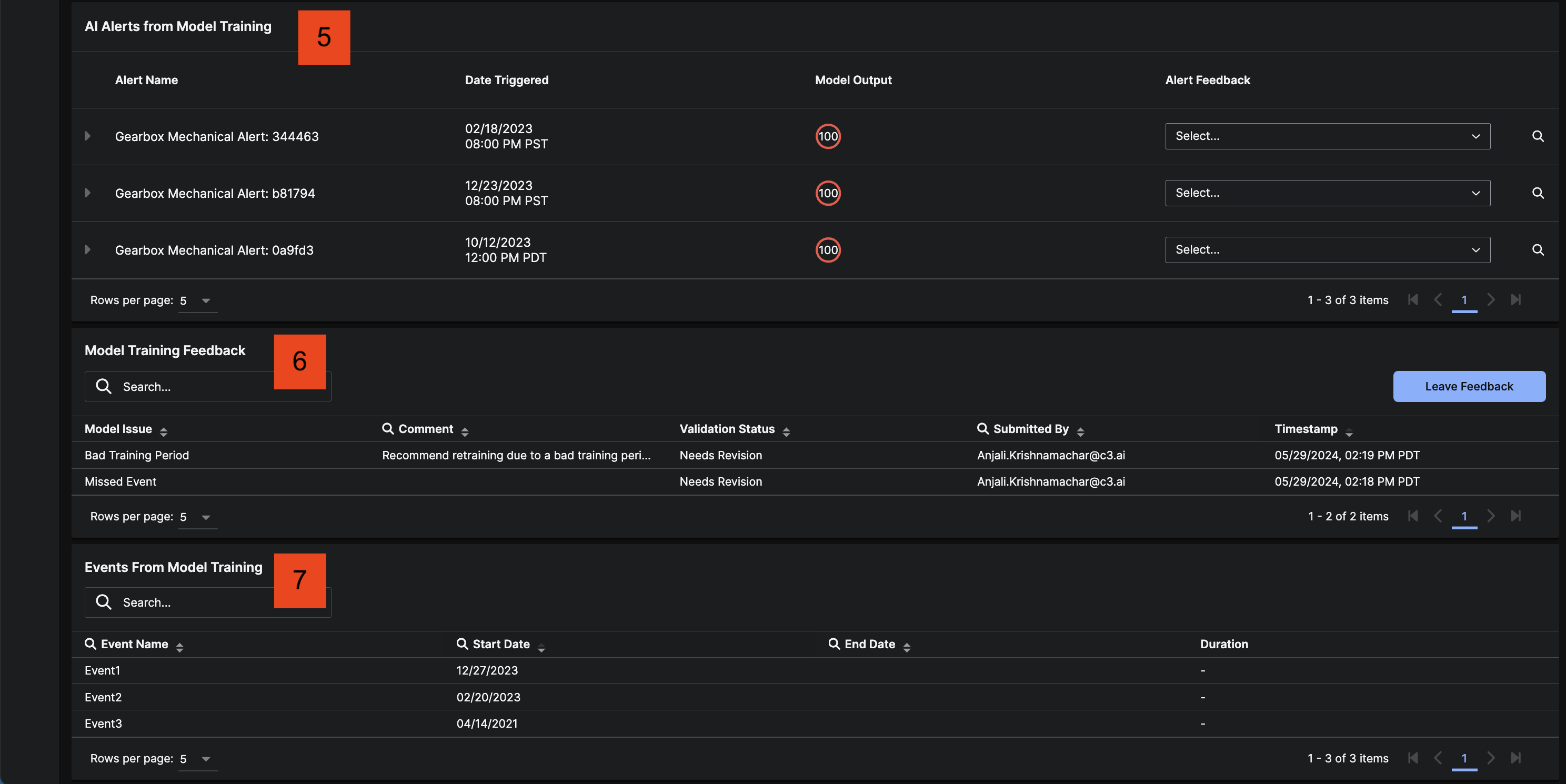

- AI Alerts from Model Training grid that expands to show feature contribution percentages for each alert. You can also provide Alert Feedback and categorize each alert.

- Model Training Feedback grid that displays comments and logs changes to the Validation Status.

- Events from Model Training grid that shows all recorded events from the training and validation datasets.

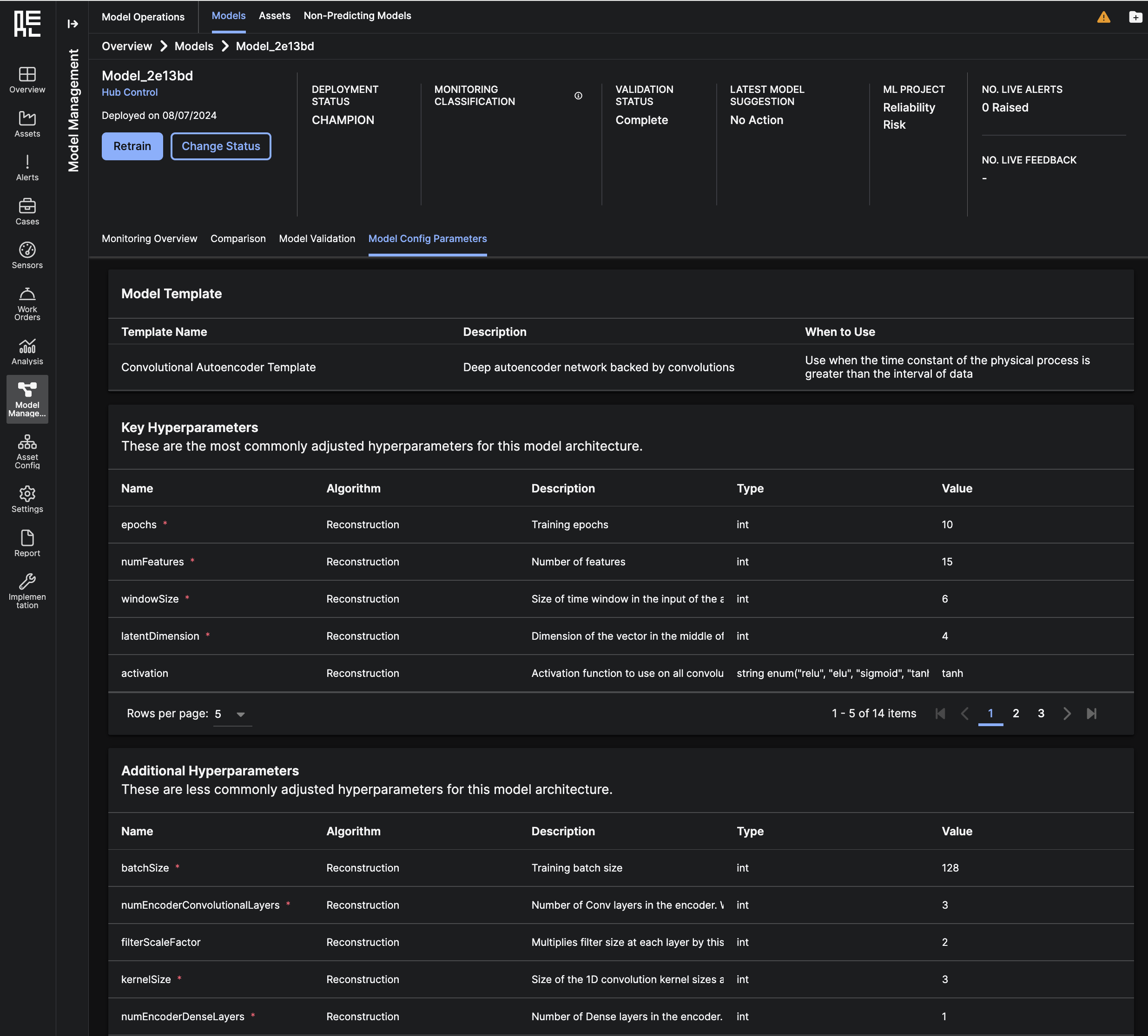

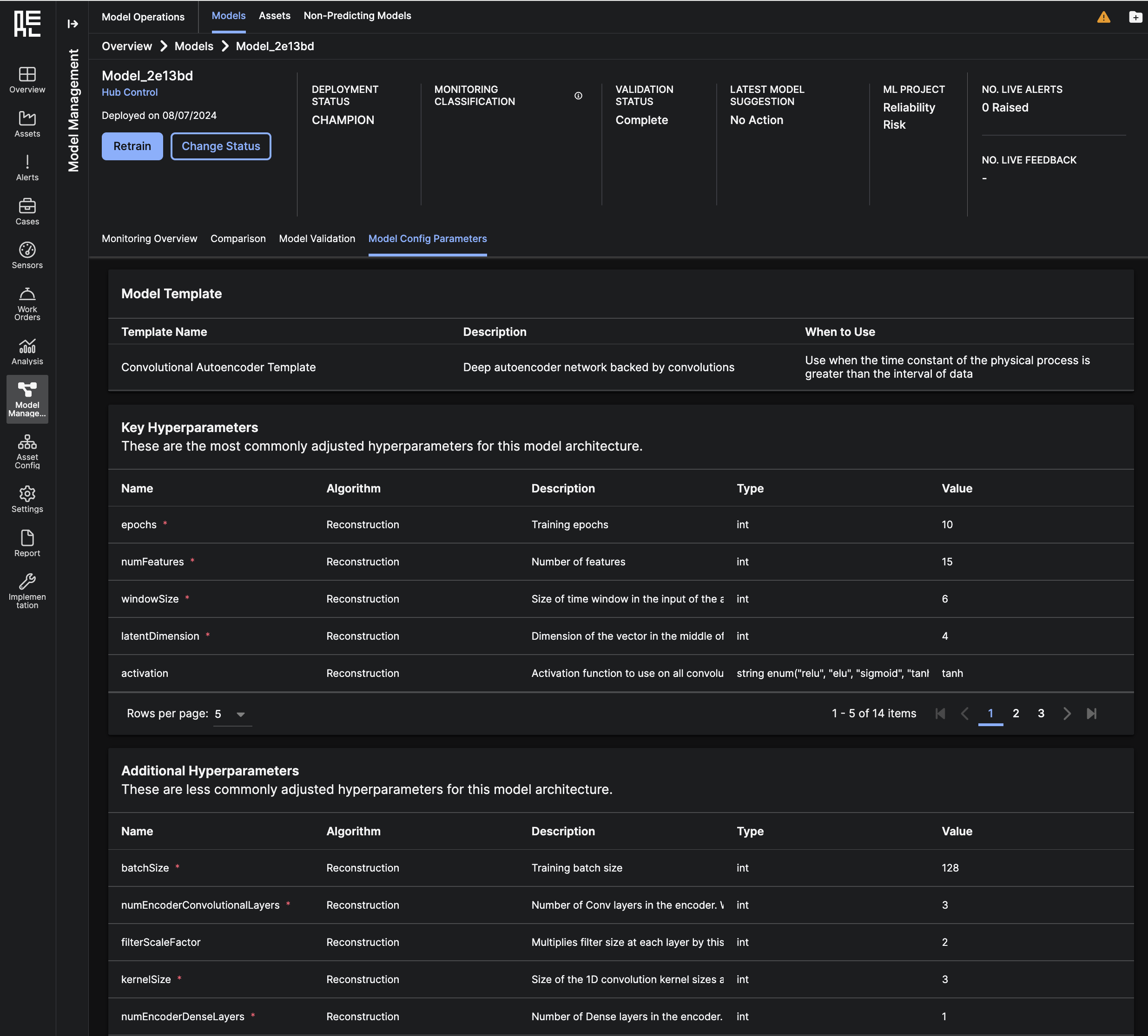

Model Config Parameters

The Model Config Parameters tab provides a reference to all of the parameters that were used to create this ML model. You can view the model’s template and hyperparameter values here.